Paper Review: Unbounded: A Generative Infinite Game of Character Life Simulation

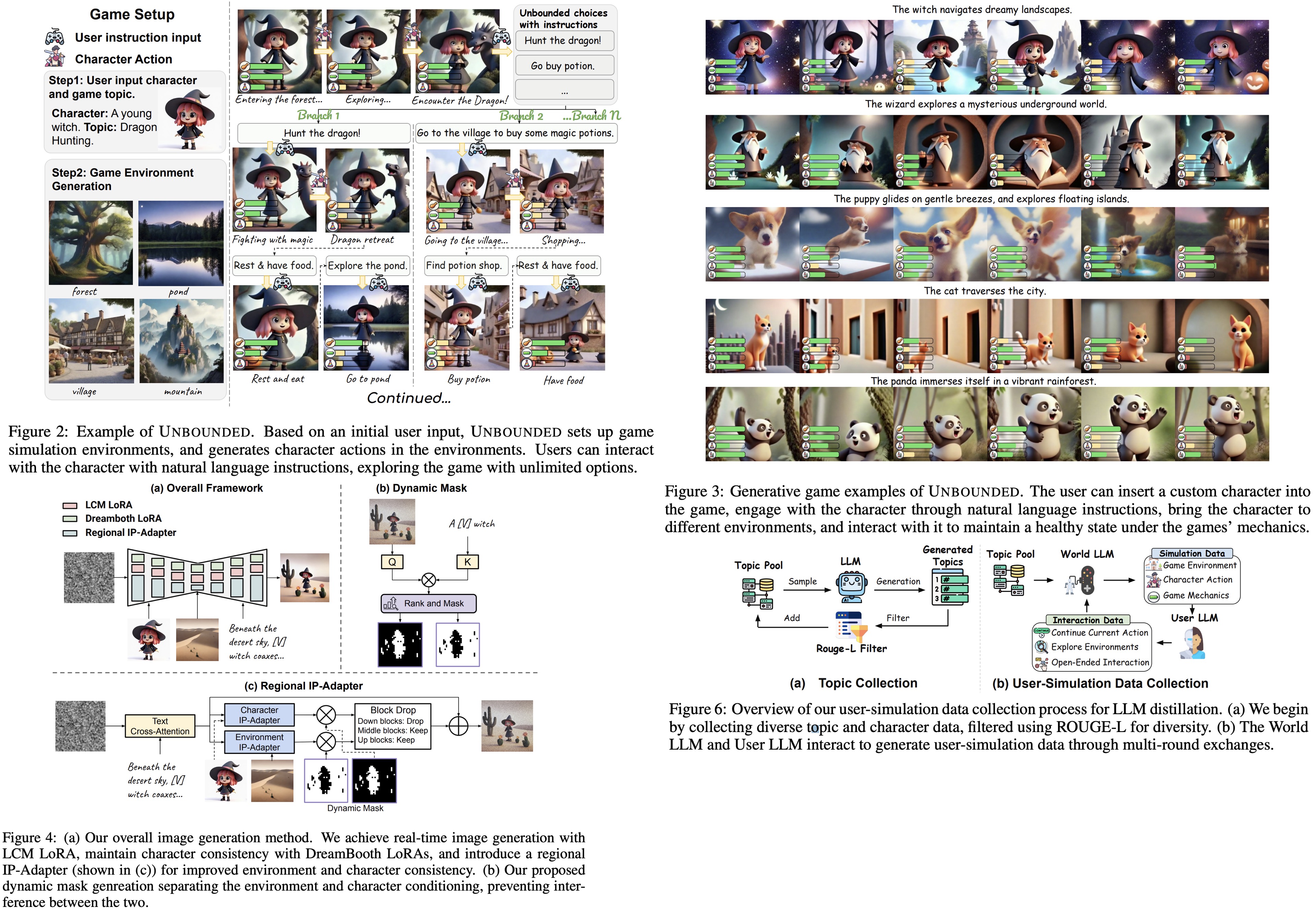

Unbounded is a generative “infinite game” where players interact with an autonomous virtual character in a sandbox life simulation, guided by generative AI models. Unlike traditional games with predefined mechanics, it uses a specialized language model to dynamically create open-ended game mechanics, narratives, and character interactions, some of which are emergent. Additionally, a new image prompt adapter maintains consistent yet adaptable visuals of the character across varied environments. The system shows significant improvements in life simulation, coherence, and visual consistency over conventional methods.

The approach

Personalization of latent consistency models for character consistency

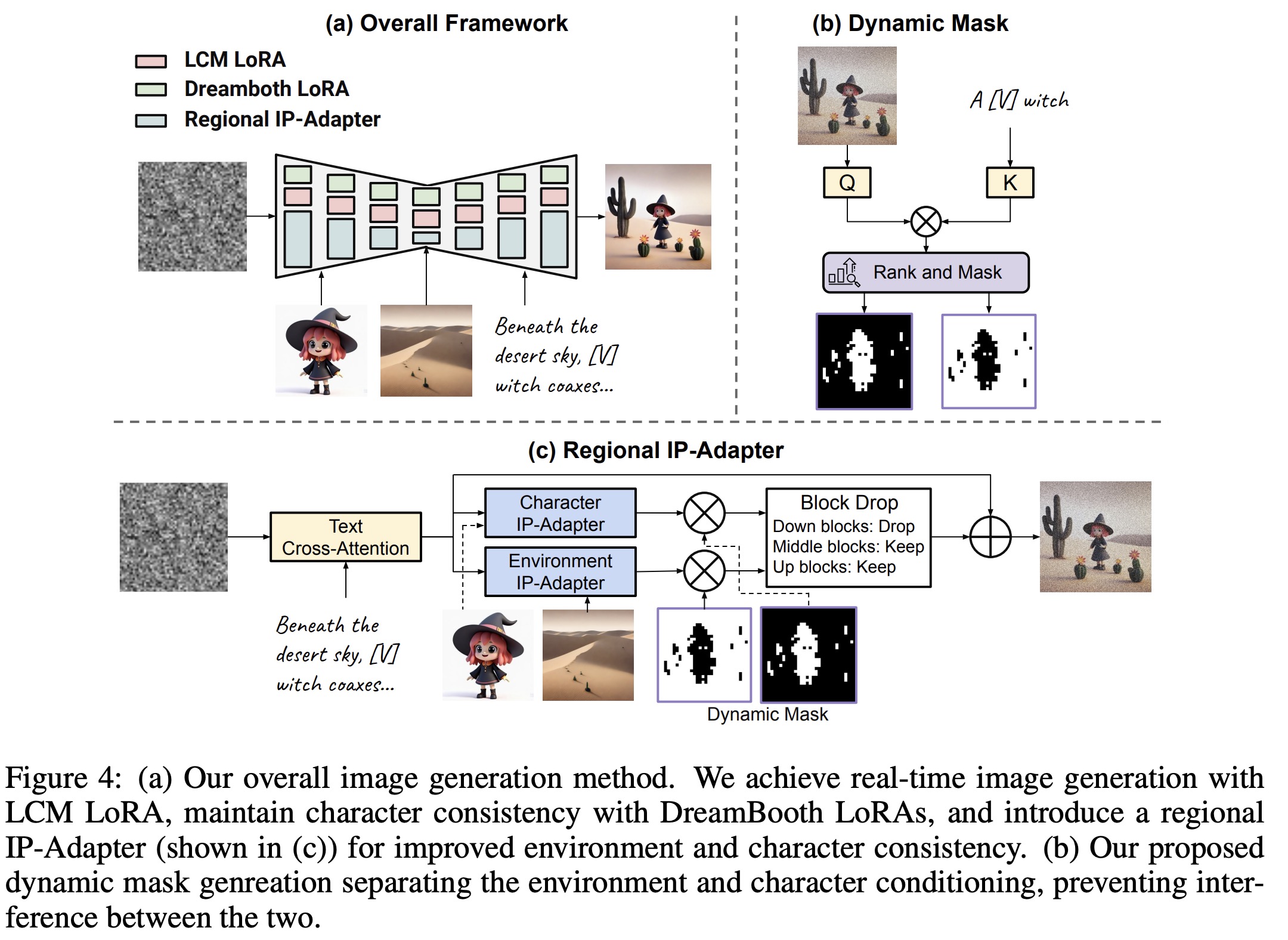

Unbounded enables real-time interaction in a fully generative game by using latent consistency models (LCMs). These generate high-resolution images in just two diffusion steps, giving a refresh rate of roughly one second. Custom characters are supported through DreamBooth, which fine-tunes the text-to-image model with LoRA modules. A unique identifier marks the character, and the subject-specific LoRA is merged with the LCM LoRA so that both generation speed and character fidelity are kept. The result is strong character preservation, which is critical for a seamless interactive experience.
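LoRA adapters are commonly merged by adding each adapter's scaled low-rank update to the frozen base weight, W' = W + sum_i s_i * (B_i A_i). The review does not give Unbounded's exact merge recipe, so the following is a minimal plain-Python sketch that assumes simple linear merging; the function names, the toy matrices, and the per-adapter scales are hypothetical.

```python
# Hypothetical sketch: fold a character (DreamBooth) LoRA and an LCM LoRA into
# one base weight matrix via W' = W + sum_i scale_i * (B_i @ A_i).
from typing import List, Tuple

Matrix = List[List[float]]
LoRA = Tuple[Matrix, Matrix, float]  # (B: out x r, A: r x in, scale)


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def merge_loras(base: Matrix, loras: List[LoRA]) -> Matrix:
    """Return a new matrix equal to base plus every scaled low-rank update."""
    merged = [row[:] for row in base]  # copy so the base weights stay untouched
    for b, a, scale in loras:
        delta = matmul(b, a)  # (out x r) @ (r x in) -> (out x in)
        for i in range(len(merged)):
            for j in range(len(merged[0])):
                merged[i][j] += scale * delta[i][j]
    return merged


# Toy 2x2 layer with two rank-1 adapters.
W = [[1.0, 0.0], [0.0, 1.0]]
character_lora = ([[1.0], [0.0]], [[0.0, 1.0]], 0.5)
lcm_lora = ([[0.0], [1.0]], [[1.0, 0.0]], 1.0)
W_merged = merge_loras(W, [character_lora, lcm_lora])
print(W_merged)  # [[1.0, 0.5], [1.0, 1.0]]
```

Because merging folds both adapters into a single weight, inference costs the same as the plain base model, which fits the real-time goal.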

Regional IP-Adapter with block drop for environment consistency

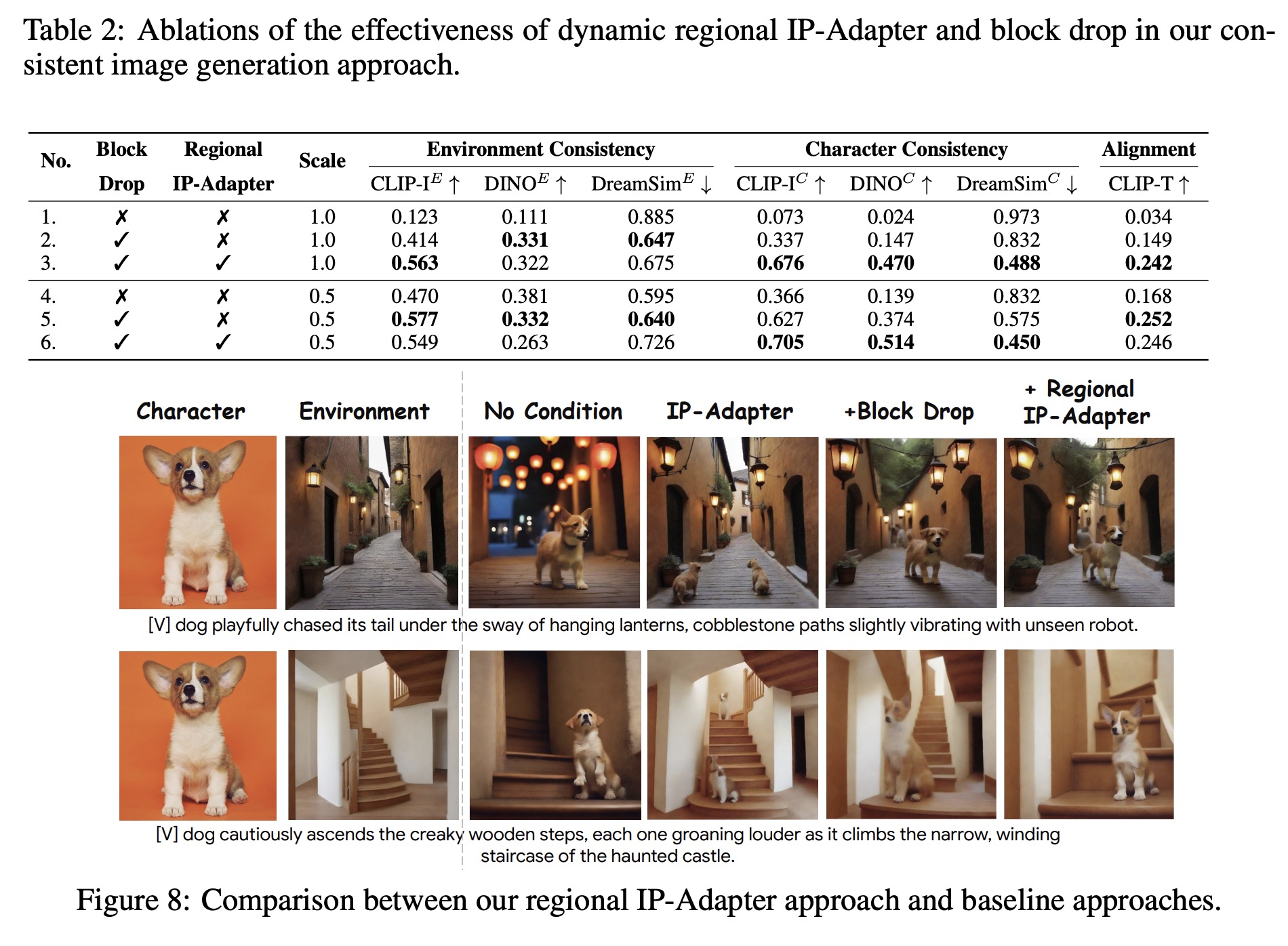

To address environment consistency and accurate character placement, the authors introduce a regional IP-Adapter that is conditioned on both the subject and the environment. Unlike a standard IP-Adapter, it includes a dynamic regional injection mechanism, so the character and the environment are conditioned separately. Using masks in the cross-attention layers, the regional IP-Adapter dynamically routes attention to the character and environment conditions, preserving the integrity of each.
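A minimal sketch of the masked injection, assuming IP-Adapter-style decoupled cross-attention (the text-attention output plus a scaled image-attention term) and a precomputed character mask. The function names, scales, and the fixed mask are illustrative assumptions; how the paper computes its dynamic mask is not reproduced here.

```python
# Sketch: each spatial query attends to text tokens as usual; character image
# tokens are added inside the character mask, environment tokens outside it.
import math
from typing import List, Tuple

Vec = List[float]
KV = Tuple[List[Vec], List[Vec]]  # (keys, values)


def attend(q: Vec, keys: List[Vec], values: List[Vec]) -> Vec:
    """Scaled dot-product attention for a single query vector."""
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]


def regional_cross_attention(queries: List[Vec], text_kv: KV, char_kv: KV, env_kv: KV,
                             mask: List[float], char_scale: float = 1.0,
                             env_scale: float = 1.0) -> List[Vec]:
    """mask[i] is 1.0 where query i lies in the character region, else 0.0."""
    out = []
    for q, m in zip(queries, mask):
        t = attend(q, *text_kv)
        c = attend(q, *char_kv)
        e = attend(q, *env_kv)
        out.append([ti + m * char_scale * ci + (1.0 - m) * env_scale * ei
                    for ti, ci, ei in zip(t, c, e)])
    return out


queries = [[1.0, 0.0], [0.0, 1.0]]
text_kv = ([[1.0, 0.0]], [[0.0, 0.0]])
char_kv = ([[1.0, 0.0]], [[1.0, 0.0]])
env_kv = ([[0.0, 1.0]], [[0.0, 1.0]])
result = regional_cross_attention(queries, text_kv, char_kv, env_kv, mask=[1.0, 0.0])
print(result)  # [[1.0, 0.0], [0.0, 1.0]]
```

The first position (inside the mask) picks up only the character signal and the second only the environment signal, which is the separation the regional design aims for.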

Block drop separates character and environment generation across the sampling blocks: the IP-Adapter conditioning is applied only in the mid and up-sample blocks and dropped from the down-sample blocks, which mainly determine the spatial layout. The text prompt therefore governs the layout, so the background aligns with the prompt without compromising character details such as appearance and pose.
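Block drop can be pictured as a per-block schedule for the IP-Adapter scale. This hypothetical sketch zeroes the scale in the down-sample blocks and keeps it in the mid and up-sample blocks; the block names are invented and do not correspond to any particular library's network.

```python
# Hypothetical sketch of block drop: per-block IP-Adapter scales, with the
# layout-forming down-sample blocks dropped (scale 0.0).
from typing import Dict, List


def block_drop_scales(blocks: List[str], scale: float) -> Dict[str, float]:
    """Map each block name to the IP-Adapter scale it should use."""
    scales = {}
    for name in blocks:
        if name.startswith("down"):
            scales[name] = 0.0    # no image injection: the text prompt shapes layout
        else:
            scales[name] = scale  # mid and up blocks keep the IP-Adapter conditioning
    return scales


unet_blocks = ["down_0", "down_1", "down_2", "mid", "up_0", "up_1", "up_2"]
scales = block_drop_scales(unet_blocks, 0.8)
print(scales)
```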

Language model game engine with open-ended interactions and integrated game mechanics

Unbounded enables character simulation in predefined environments, letting users interact through natural language and perform open-ended actions. The key challenges are binding characters to environments based on user instructions, generating coherent narratives that match the character's traits, managing game mechanics such as character states (hunger, energy, fun, hygiene), and rewriting prompts for accurate visual generation. Because large models like GPT-4 are too slow for real-time play, Unbounded distills these capabilities into a smaller model based on Gemma-2B.
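To make the game-mechanics bookkeeping concrete, here is a hypothetical rule-based stand-in for character-state tracking. In Unbounded the language model itself decides how states change; the 0-100 meters, the action table, and the numbers are assumptions for illustration only.

```python
from dataclasses import dataclass, field
from typing import Dict

STATS = ("hunger", "energy", "fun", "hygiene")

# Hypothetical effect table: each stat is a 0-100 "satisfaction" meter
# (higher is better). In Unbounded the LLM decides these changes instead.
ACTION_EFFECTS: Dict[str, Dict[str, int]] = {
    "eat": {"hunger": 30, "hygiene": -5},
    "sleep": {"energy": 40, "hunger": -10},
    "play": {"fun": 25, "energy": -15, "hygiene": -10},
    "bathe": {"hygiene": 40, "fun": 5},
}


@dataclass
class CharacterState:
    # Every stat starts at the midpoint of its 0-100 range.
    values: Dict[str, int] = field(default_factory=lambda: {s: 50 for s in STATS})

    def apply(self, action: str) -> None:
        """Apply an action's effects, clamping each stat to [0, 100]."""
        for stat, delta in ACTION_EFFECTS.get(action, {}).items():
            self.values[stat] = max(0, min(100, self.values[stat] + delta))


state = CharacterState()
state.apply("play")
state.apply("eat")
print(state.values)  # {'hunger': 80, 'energy': 35, 'fun': 75, 'hygiene': 35}
```

A fixed table like this cannot handle open-ended actions, which is exactly why the paper delegates state updates to the language model.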

The character life simulation game in Unbounded uses two LLM agents: a world simulation model, which sets up environments, generates narratives, tracks character states, and simulates behavior; and a user model, which manages player interactions, including storytelling, environment changes, and character actions. In each interaction, the user can describe the character or its actions, and this input in turn guides narrative generation. This dynamic interaction enables open-ended narratives and character experiences.
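The alternation between the two agents can be sketched as a simple turn loop. The agent callables are hypothetical stubs standing in for LLM calls; in the live game, a player's input would take the user model's place.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (role, text)
Agent = Callable[[List[Turn]], str]


def run_episode(world_model: Agent, user_model: Agent, setup: str, rounds: int) -> List[Turn]:
    """Alternate user and world-model turns after an initial world setup."""
    history: List[Turn] = [("world", setup)]
    for _ in range(rounds):
        history.append(("user", user_model(history)))    # player (or simulated player) acts
        history.append(("world", world_model(history)))  # narrative and state update
    return history


# Hypothetical stubs in place of real LLM calls.
def stub_user(history: List[Turn]) -> str:
    return f"action {len(history) // 2 + 1}"


def stub_world(history: List[Turn]) -> str:
    return f"narrative responding to '{history[-1][1]}'"


for role, text in run_episode(stub_world, stub_user, "A cozy forest cabin.", rounds=2):
    print(f"{role}: {text}")
```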

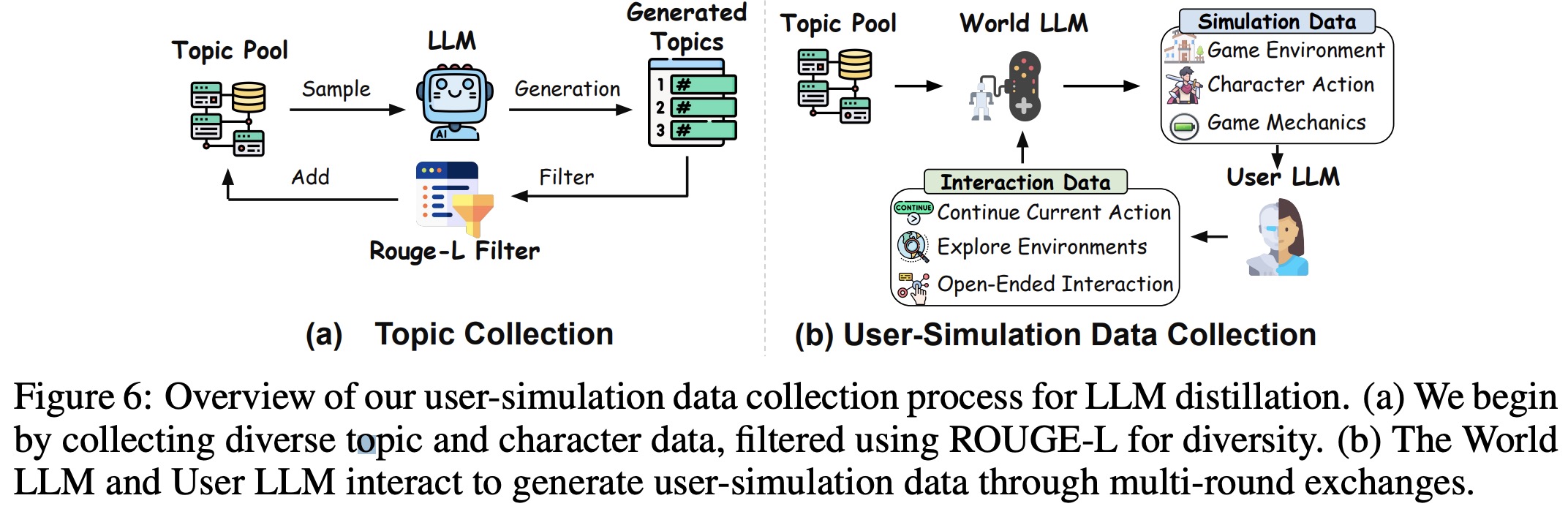

For efficient real-time interaction, Unbounded distills these capabilities from the larger LLMs into Gemma-2B, training on synthetic data generated by the stronger models. The authors collected 5,000 unique topic-character pairs, together with multi-round interactions between the world simulation and user models.
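One plausible way to turn such multi-round interactions into distillation data is to make every world-model turn a supervised target, conditioned on the topic, the character, and the preceding dialogue. The prompt layout, field names, and JSONL output below are assumptions, not the authors' format.

```python
import json
from typing import Dict, List, Tuple

Turn = Tuple[str, str]  # (role, text)


def episode_to_examples(topic: str, character: str, history: List[Turn]) -> List[Dict[str, str]]:
    """Make one (prompt, target) pair per world-model turn in an episode."""
    header = f"Topic: {topic}\nCharacter: {character}"
    examples = []
    for i, (role, text) in enumerate(history):
        if role != "world":
            continue  # the student model only learns to produce world-model turns
        context = "\n".join(f"{r}: {t}" for r, t in history[:i])
        prompt = f"{header}\n{context}" if context else header
        examples.append({"prompt": prompt, "target": text})
    return examples


episode = [
    ("world", "A cozy forest cabin."),
    ("user", "Bake some bread."),
    ("world", "The cabin fills with the smell of bread."),
]
examples = episode_to_examples("cooking", "a curious cat", episode)
jsonl = "\n".join(json.dumps(ex) for ex in examples)  # one JSON object per line
print(jsonl)
```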

Experiments

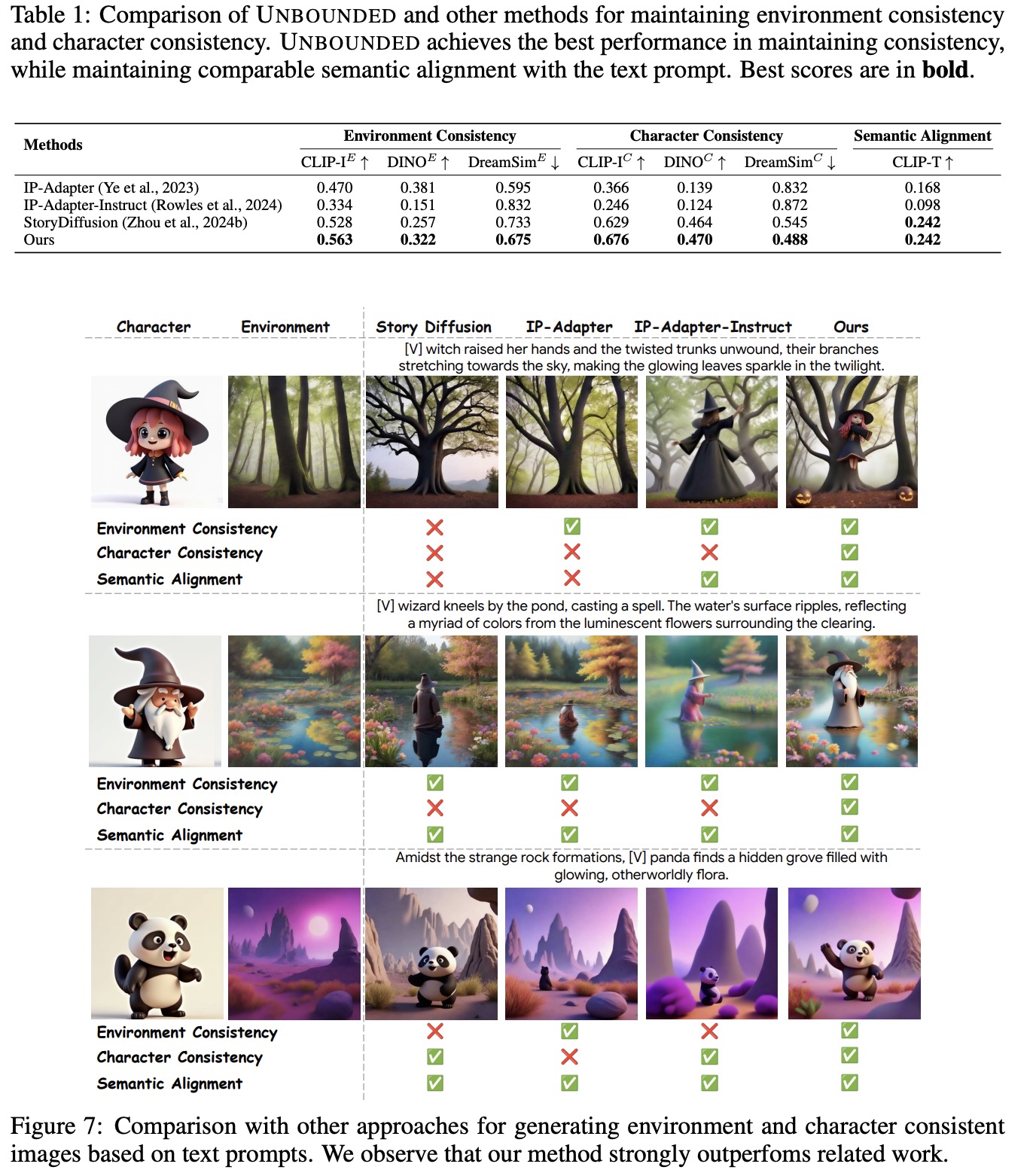

The regional IP-Adapter with block drop in Unbounded outperforms previous methods in maintaining both environment and character consistency. Additionally, it achieves comparable performance in semantic alignment, indicating strong adherence to text prompts. Qualitative comparisons further highlight that the regional IP-Adapter consistently generates characters with stable appearances and accurately matches environments, while other methods may falter in character inclusion or environment consistency.

The regional IP-Adapter with block drop is crucial for placing characters accurately in environments based on text prompts while maintaining both environment and character consistency. Ablation studies show that block drop improves both, with notable increases in the CLIP-IE (environment) and CLIP-IC (character) consistency scores. Using a smaller environment IP-Adapter scale improves character consistency but slightly reduces environment consistency.
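The review does not define these metrics, but CLIP-based consistency scores are typically the mean cosine similarity between CLIP image embeddings of generated images and a reference image. The sketch assumes precomputed embeddings, and it assumes that CLIP-IE compares against an environment reference while CLIP-IC compares against a character reference; both are assumptions.

```python
import math
from typing import List

Vec = List[float]


def cosine(a: Vec, b: Vec) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def clip_consistency(generated: List[Vec], reference: Vec) -> float:
    """Mean cosine similarity of generated-image embeddings to one reference embedding."""
    return sum(cosine(g, reference) for g in generated) / len(generated)


# Toy 2-D "embeddings": one image matches the reference, one is orthogonal to it.
print(clip_consistency([[1.0, 0.0], [0.0, 1.0]], [1.0, 0.0]))  # 0.5
```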

Qualitative results indicate that block drop improves text-prompt adherence and layout, and the dynamic mask scheme further boosts character consistency while keeping the environment context intact. Distilling the game engine into Gemma-2B also proves effective: the distilled model outperforms smaller LLMs and nearly matches GPT-4o in simulating the game world and character actions. Training on the larger dataset (5K samples) yields better performance, suggesting further gains with additional data.
