Paper Review: Connecting Large Language Models with Evolutionary Algorithms Yields Powerful Prompt Optimizers

EvoPrompt is a framework that uses evolutionary algorithms (EAs) to automate prompt creation for LLMs. LLMs typically depend on manually crafted prompts, which are labor-intensive to write. By integrating EAs with LLMs, EvoPrompt generates coherent, readable prompts without needing access to gradients or parameters. Starting from an initial population of prompts, it iteratively generates and refines new prompts, with the LLM itself carrying out the evolutionary operators. When tested on both closed- and open-source LLMs across 9 datasets, EvoPrompt showed a significant improvement over human-written prompts and other automatic prompt generation methods.

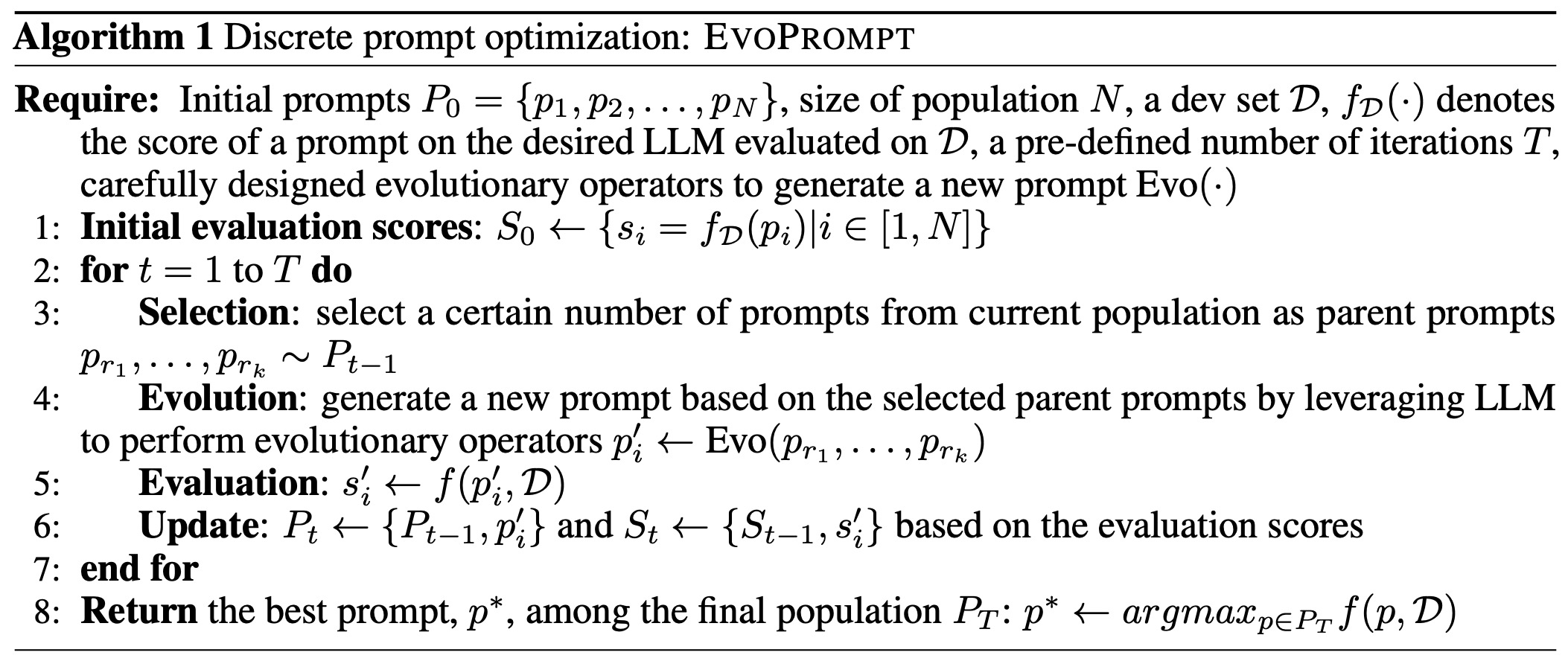

Automatic discrete prompt optimization

Advanced LLMs are usually accessed as black-box systems, so their gradients and parameters are unavailable. Evolutionary algorithms are a natural fit for this setting: they are derivative-free optimization methods known for their accuracy and quick convergence. The challenge with using EAs for discrete prompt optimization is that their evolutionary operators edit individual elements independently, without considering how those elements relate to one another, which makes it difficult to produce coherent prompts. To solve this, EvoPrompt combines the LLMs' language expertise with the EAs' optimization ability. For practical implementation, the EvoPrompt framework was paired with two popular EAs: the Genetic Algorithm and Differential Evolution.

Framework of EvoPrompt

EvoPrompt operates in three main steps to optimize the generation of prompts for LLMs:

- Initial Population: EvoPrompt starts with an initial set of prompts that includes both manually written prompts, to take advantage of human expertise, and LLM-generated prompts, to ensure diversity. The diversity is meant to keep the search from getting stuck in local optima.

- Evolution: During each iteration, EvoPrompt selects parent prompts from the current population and uses the LLM to create new prompts from them. The specific mutation and crossover steps are designed separately for each type of EA, and the LLM is given instructions that guide it to produce new, coherent prompts.

- Update: After generating new prompts, their performance is evaluated using a development set. The best-performing prompts are kept, emulating the concept of “survival of the fittest” in natural selection.

The algorithm continues iterating until a predefined number of iterations is reached. Depending on the EA type used, the evolutionary and updating processes might need adjustments. The main challenge lies in designing the evolutionary operators for discrete prompts.
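The three steps above can be sketched as a small loop. This is only an illustrative outline, not the authors' implementation: `evolve` and `score` are hypothetical callables standing in for the LLM-driven operators and the development-set evaluation, and the toy versions below exist only so the sketch runs without an LLM.

```python
from typing import Callable, List, Tuple

# Hypothetical signatures (not from the paper): `Evolve` stands in for the
# LLM-driven operators, `Score` for evaluation on the development set.
Evolve = Callable[[List[str], List[float]], List[str]]
Score = Callable[[str], float]


def evoprompt_loop(initial_prompts: List[str], evolve: Evolve, score: Score,
                   iterations: int) -> Tuple[str, float]:
    """Initialize, evolve, update; returns the best prompt and its score."""
    # Initial population: scored once up front.
    population = list(initial_prompts)
    scores = [score(p) for p in population]
    size = len(population)

    for _ in range(iterations):
        # Evolution: create new candidate prompts from the current population.
        children = evolve(population, scores)
        # Update: score the candidates and keep the `size` best prompts overall.
        ranked = list(zip(population, scores)) + [(c, score(c)) for c in children]
        ranked.sort(key=lambda pair: pair[1], reverse=True)
        population = [p for p, _ in ranked[:size]]
        scores = [s for _, s in ranked[:size]]

    return max(zip(population, scores), key=lambda pair: pair[1])


# Toy stand-ins (assumptions for illustration only).
def toy_score(prompt: str) -> float:
    # Counts distinct whitespace-separated words.
    return float(len(set(prompt.split())))


def toy_evolve(population: List[str], scores: List[float]) -> List[str]:
    # Appends one word to every prompt; a real system would call an LLM here.
    return [p + " carefully" for p in population]


best_prompt, best_score = evoprompt_loop(
    ["Classify the review.", "Is it positive?"], toy_evolve, toy_score, iterations=2
)
```

The update rule shown here (keep the top prompts overall) is one possible choice. As noted above, the evolution and update steps are adjusted depending on which EA is used.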

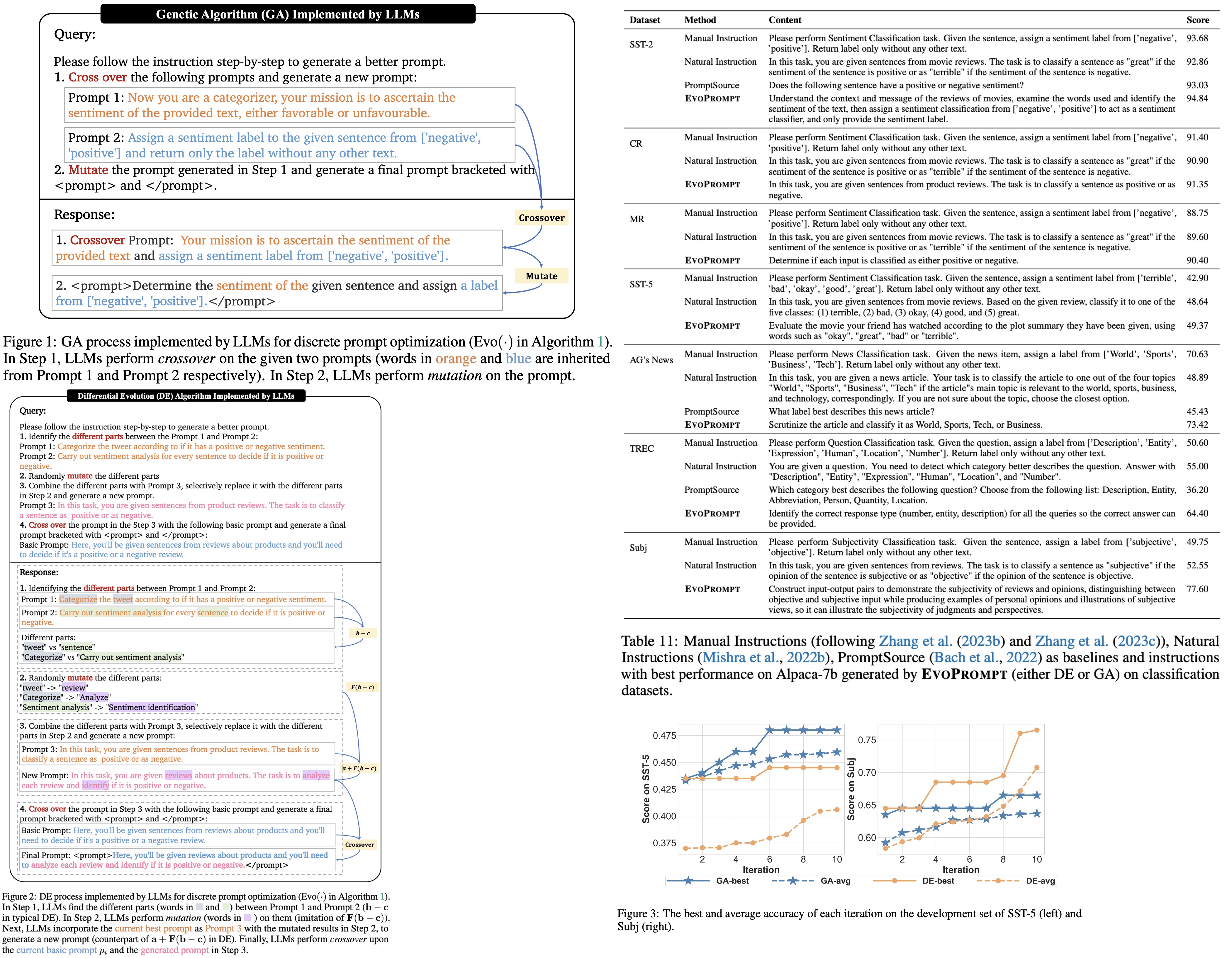

Instantiation with genetic algorithm

When EvoPrompt is instantiated with the Genetic Algorithm (GA), the process comprises the following main steps:

- Selection: Parent prompts are selected from the current population using the roulette wheel selection method based on their performance scores on a development set. The probability of a prompt being chosen as a parent is proportional to its performance score divided by the sum of the scores of all prompts.

- Evolution: A new prompt is generated in two steps. First, crossover: the selected parent prompts are combined to produce a new prompt that takes components from both. Then, mutation: random changes are made to the prompt produced by the crossover step.

- Update: New candidate prompts are evaluated using a development set to obtain quality scores. Each iteration produces ‘N’ new prompts that are added to the current population, also of size ‘N’. The updated population is then filtered to retain the ‘N’ highest-scoring prompts.
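A minimal sketch of one GA iteration as described above, assuming non-negative scores. The names `roulette_probabilities`, `ga_step`, and `llm_crossover_then_mutate` are hypothetical; the last one stands in for the LLM call that performs crossover and mutation, and is replaced by a toy string operation here.

```python
import random
from typing import Callable, List, Tuple


def roulette_probabilities(scores: List[float]) -> List[float]:
    """p_i = s_i / sum_j s_j, with a uniform fallback if all scores are zero."""
    total = sum(scores)
    if total <= 0:
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]


def ga_step(population: List[str], scores: List[float],
            llm_crossover_then_mutate: Callable[[str, str], str],
            score: Callable[[str], float],
            rng: random.Random) -> Tuple[List[str], List[float]]:
    """One GA iteration: select parents, create N children, keep the top N."""
    n = len(population)
    probs = roulette_probabilities(scores)

    children = []
    for _ in range(n):
        # Roulette wheel selection. Sampling is with replacement, so both
        # parents can be the same prompt (a simplification in this sketch).
        parent_a, parent_b = rng.choices(population, weights=probs, k=2)
        children.append(llm_crossover_then_mutate(parent_a, parent_b))

    # Update: merge the N parents with the N children, retain the N best.
    merged = list(zip(population, scores)) + [(c, score(c)) for c in children]
    merged.sort(key=lambda pair: pair[1], reverse=True)
    survivors = merged[:n]
    return [p for p, _ in survivors], [s for _, s in survivors]


# Toy stand-ins (assumptions): prompt length as the score, concatenation as
# the crossover-plus-mutation operator.
def toy_score(prompt: str) -> float:
    return float(len(prompt))


def toy_operator(parent_a: str, parent_b: str) -> str:
    return parent_a + " " + parent_b


population = ["Summarize the text.", "Write a short summary."]
scores = [toy_score(p) for p in population]
new_population, new_scores = ga_step(
    population, scores, toy_operator, toy_score, random.Random(0)
)
```

Because survivors are chosen from parents and children together, the best score in the population can never decrease from one iteration to the next.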

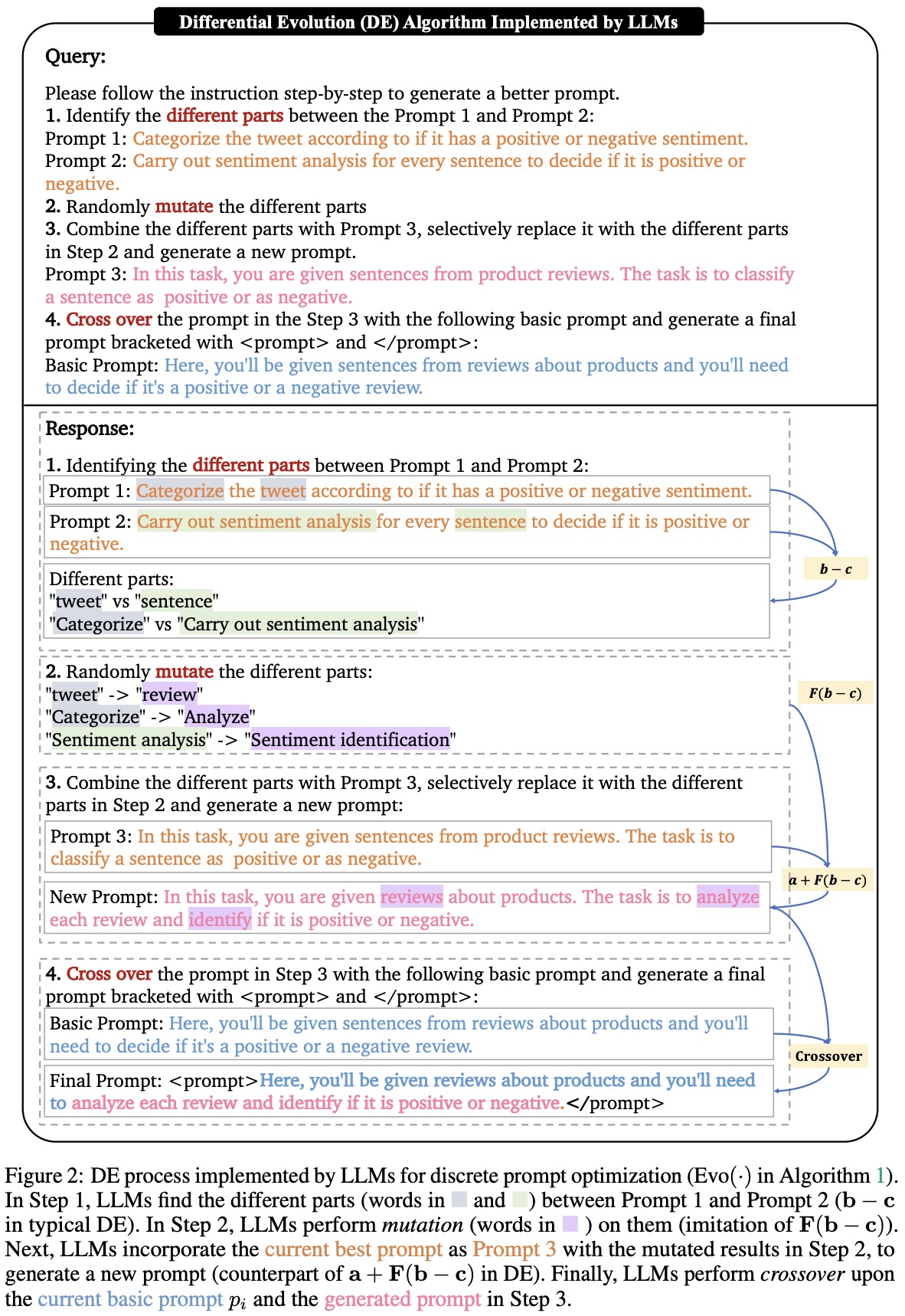

Instantiation with differential evolution

In EvoPrompt, Differential Evolution (DE) is adapted to optimize prompts. Classical DE represents solutions as numerical vectors and improves them through mutation and crossover steps.

Evolution:

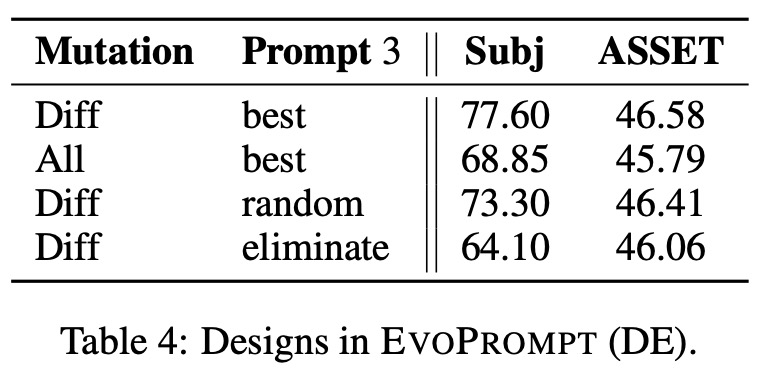

- Mutation: Inspired by DE, EvoPrompt mutates only the differing parts of two randomly selected prompts from the current population, preserving the shared components that tend to have a positive impact. Additionally, a variant of DE uses the best current vector in the mutation process; similarly, EvoPrompt can replace parts of the current best prompt with mutated components.

- Crossover: In DE, crossover is the process of blending two vectors. In EvoPrompt, crossover involves replacing specific components of a current candidate prompt with segments from a mutated prompt, potentially creating a more effective new prompt.

Following standard DE procedures, each prompt in the current population is selected in turn as the base for generating a new prompt. Whichever of the two scores higher, the original or the new prompt, is retained in the population. This keeps the population size constant while improving its overall quality.
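The DE update described above can be sketched as follows. This is an illustrative outline under several assumptions: `llm_de_evolve` is a hypothetical stand-in for the LLM call that performs mutation and crossover, the two donor prompts are drawn from the prompts other than the current one, the best prompt is fixed at the start of the iteration, and the population has at least three prompts.

```python
import random
from typing import Callable, List, Tuple


def de_step(population: List[str], scores: List[float],
            llm_de_evolve: Callable[[str, str, str, str], str],
            score: Callable[[str], float],
            rng: random.Random) -> Tuple[List[str], List[float]]:
    """One DE iteration: each prompt competes only with its own offspring."""
    # Best prompt at the start of the iteration (an assumption of this sketch).
    best = population[max(range(len(population)), key=scores.__getitem__)]

    new_population, new_scores = [], []
    for i, current in enumerate(population):
        # Two distinct donor prompts, excluding the current one (assumption).
        others = [p for j, p in enumerate(population) if j != i]
        donor_a, donor_b = rng.sample(others, 2)

        candidate = llm_de_evolve(current, donor_a, donor_b, best)
        candidate_score = score(candidate)

        # Keep whichever scores higher, so the population size never changes.
        if candidate_score > scores[i]:
            new_population.append(candidate)
            new_scores.append(candidate_score)
        else:
            new_population.append(current)
            new_scores.append(scores[i])
    return new_population, new_scores


# Toy stand-ins (assumptions): a real system would ask an LLM to mutate the
# differing parts and cross them into the current prompt.
def toy_score(prompt: str) -> float:
    return float(len(prompt))


def toy_de_evolve(current: str, donor_a: str, donor_b: str, best: str) -> str:
    # Append the words of donor_a that donor_b lacks (the "differing parts").
    words_b = set(donor_b.split())
    differing = [w for w in donor_a.split() if w not in words_b]
    return " ".join([current] + differing)


population = [
    "Summarize the article.",
    "Summarize the article briefly.",
    "Give a short summary of the article.",
]
scores = [toy_score(p) for p in population]
new_population, new_scores = de_step(
    population, scores, toy_de_evolve, toy_score, random.Random(0)
)
```

Unlike the GA sketch, where all parents and children compete in one pool, each slot in the population here is only ever replaced by its own offspring.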

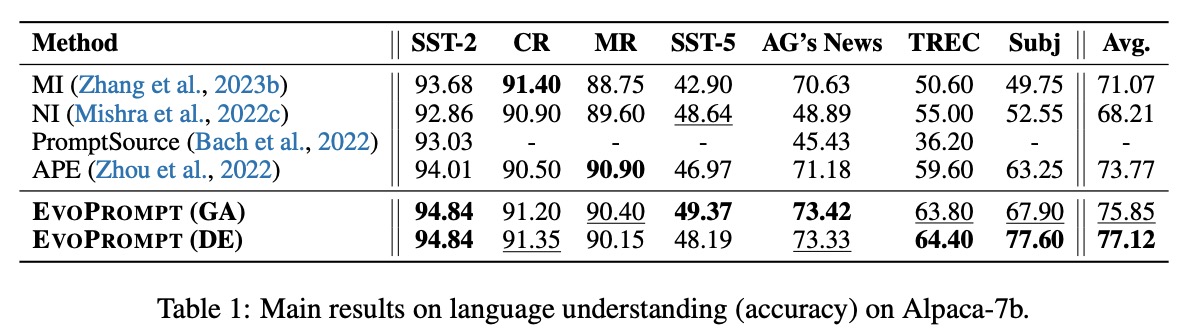

Experiments

- Language Understanding: EvoPrompt significantly outperforms both previous automatic prompt generation methods and human-written instructions.

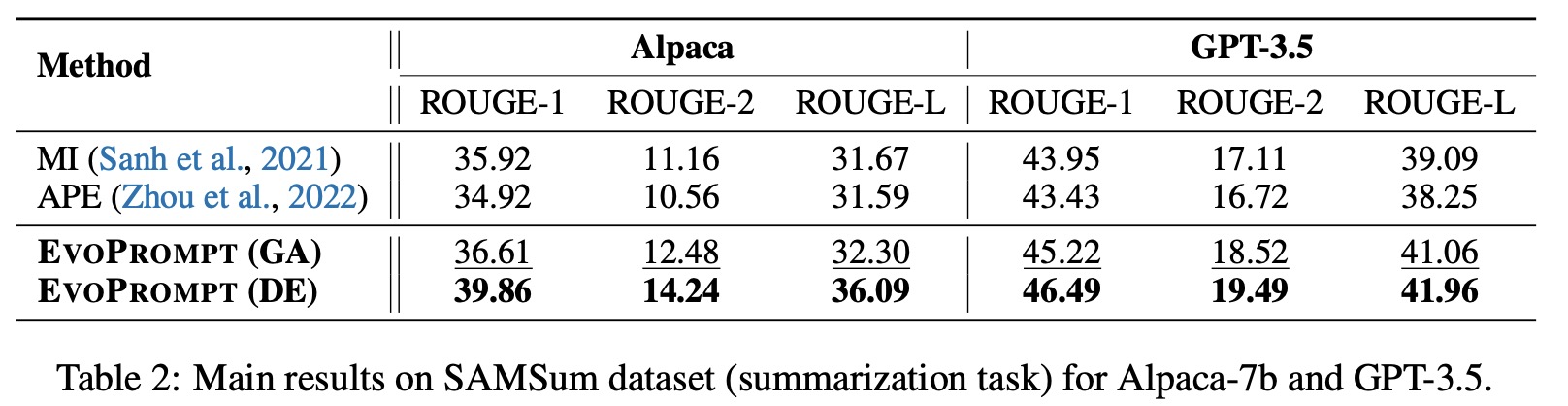

- Language Generation: EvoPrompt significantly outperforms both manually designed prompts and prompts generated by APE across different models like Alpaca-7b and GPT-3.5. For text summarization tasks, DE performs significantly better than GA, while in text simplification tasks, DE and GA perform comparably.

- Design Aspects: Confining mutation to the parts that differ between two selected prompts yields better results than mutating the entire content.

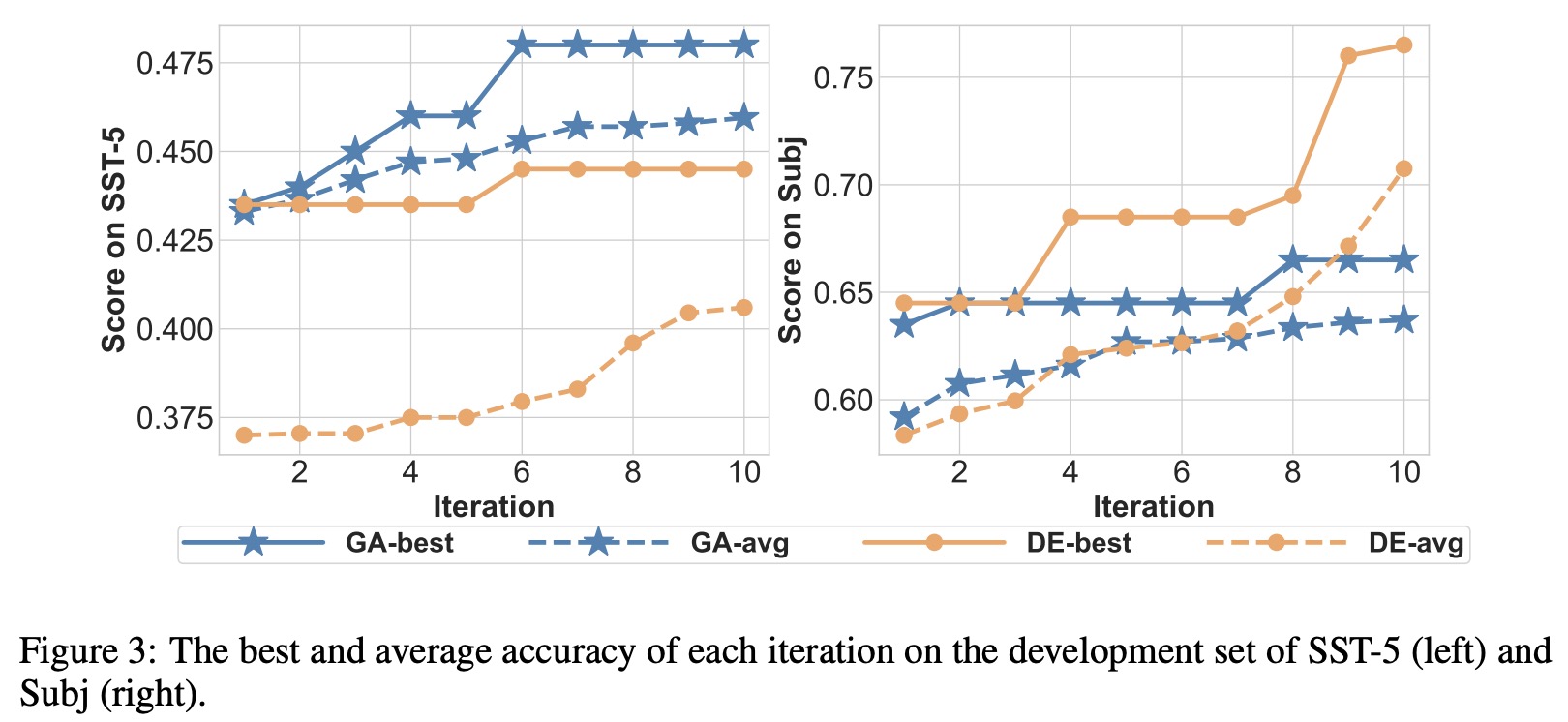

- GA vs. DE: GA is the better choice when high-quality prompts already exist, whereas DE is the better choice when existing prompts are of lower quality or when the search needs to explore beyond local optima.