Paper Review: AlphaEvolve: A coding agent for scientific and algorithmic discovery

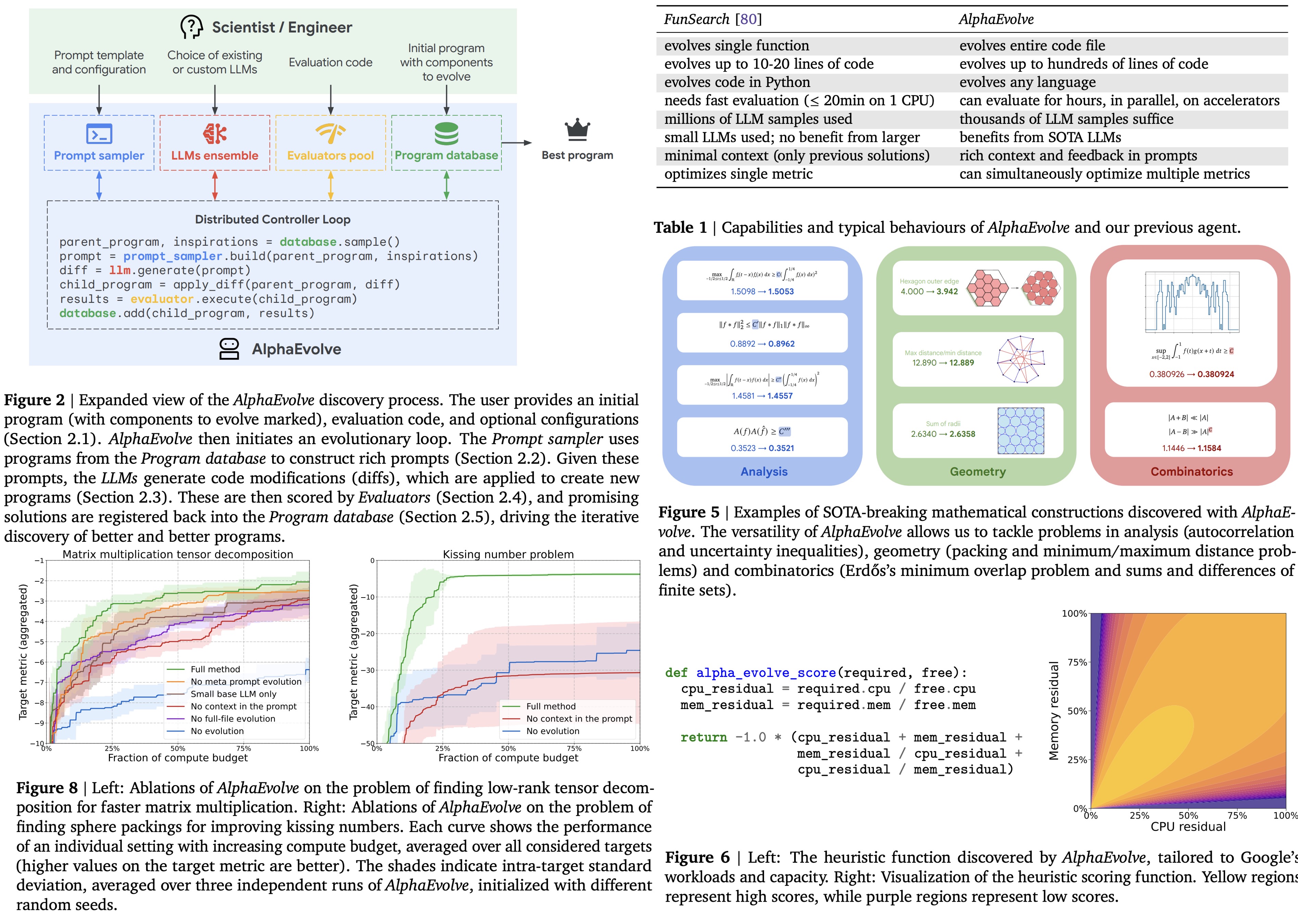

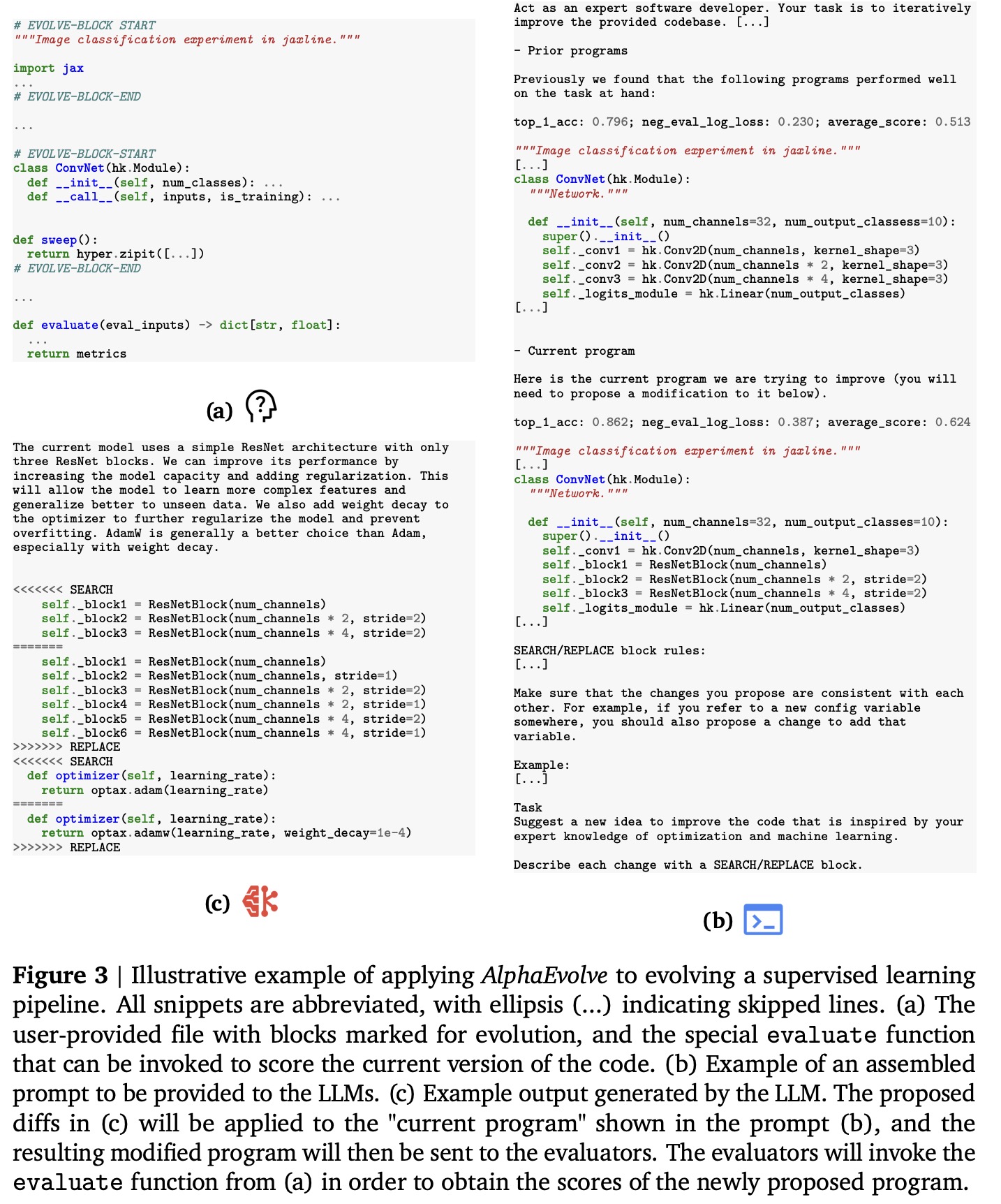

AlphaEvolve is an autonomous evolutionary coding agent designed to enhance the capabilities of LLMs by continuously improving code through iterative edits and evaluator feedback. Its goal is to tackle complex scientific and computational problems by orchestrating a pipeline of LLMs that propose and refine algorithmic solutions.

It has been successfully applied in various domains, including optimizing data center scheduling at Google, simplifying hardware circuit designs, and accelerating the training of the LLMs that power AlphaEvolve. Additionally, AlphaEvolve has discovered new, provably correct algorithms that outperform current state-of-the-art methods in mathematics and computer science. One of its most notable achievements is the discovery of a novel procedure for multiplying two 4×4 complex-valued matrices using only 48 scalar multiplications, marking the first improvement over Strassen’s algorithm in this domain in 56 years.

These results demonstrate the potential of AlphaEvolve and similar coding agents to drive meaningful advances across a broad range of scientific and computational challenges.

The approach

Task specification

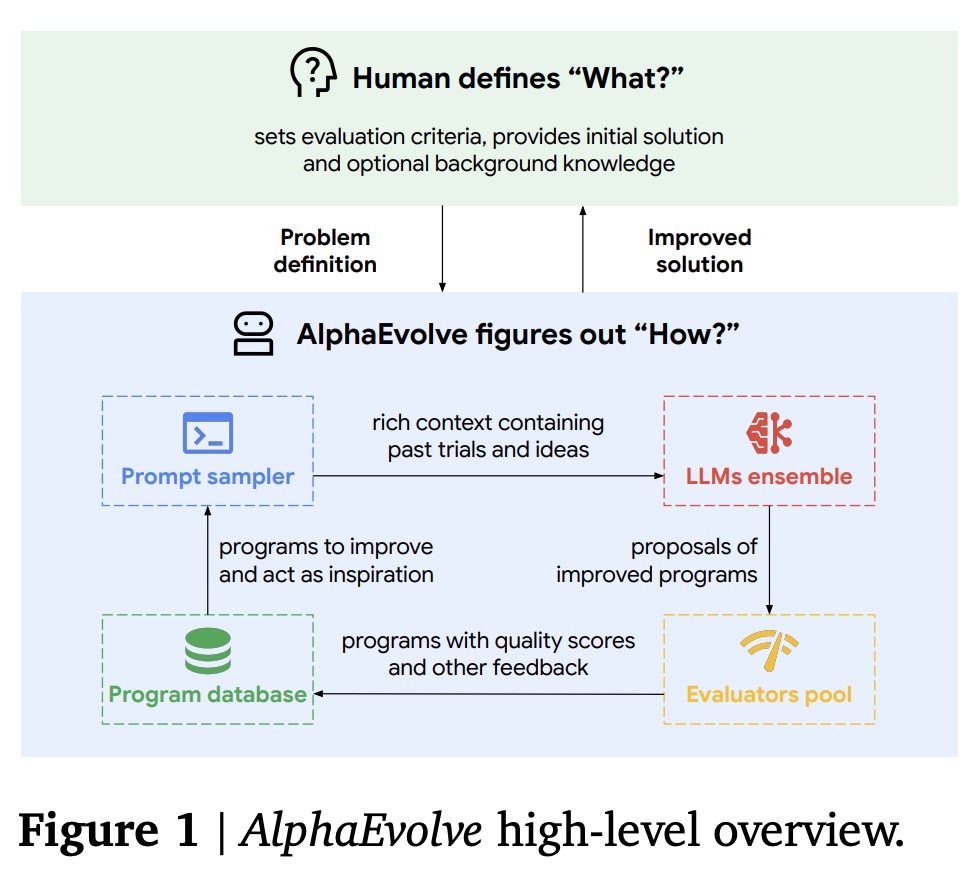

AlphaEvolve requires an automatic evaluation mechanism to assess the quality of generated solutions. This is provided by a user-defined function, which takes a solution and returns a dictionary of scalar metrics to be maximized. For simple problems, such as finding large graphs with specific properties, this function may only check a condition and return a single score. For more complex tasks, it might run search algorithms or train models.
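
To make that contract concrete, here is a minimal sketch of an evaluator for a toy graph problem. It assumes a solution is a dict with `num_vertices` and an `edges` list. The problem (large triangle-free graphs), the function name `evaluate`, and the metric key `score` are hypothetical. The only part taken from the paper is the shape of the interface: a solution goes in, and a dictionary of scalar metrics to maximize comes out.

```python
def evaluate(solution: dict) -> dict[str, float]:
    """Hypothetical evaluator: reward many edges, but only for triangle-free graphs."""
    n = solution["num_vertices"]
    edges = {frozenset(e) for e in solution["edges"]}
    # Reject malformed edges (a self-loop collapses to one element; bad vertex ids).
    if any(len(e) != 2 or not all(0 <= v < n for v in e) for e in edges):
        return {"score": 0.0}
    neighbors: dict[int, set[int]] = {v: set() for v in range(n)}
    for u, v in map(tuple, edges):
        neighbors[u].add(v)
        neighbors[v].add(u)
    # A shared neighbor of both endpoints of an edge closes a triangle.
    if any(neighbors[u] & neighbors[v] for u, v in map(tuple, edges)):
        return {"score": 0.0}
    return {"score": float(len(edges))}


# The 4-cycle is triangle-free; a triangle scores zero.
print(evaluate({"num_vertices": 4, "edges": [(0, 2), (0, 3), (1, 2), (1, 3)]}))  # {'score': 4.0}
print(evaluate({"num_vertices": 3, "edges": [(0, 1), (1, 2), (0, 2)]}))  # {'score': 0.0}
```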

To integrate with existing codebases, AlphaEvolve uses an API where users mark specific code blocks with special comments. These marked blocks serve as the starting point for evolution, while the rest of the code acts as a stable framework that connects the evolved components to the evaluation process.
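
The sketch below shows how such markers can split a file into an editable region and fixed scaffolding. The marker strings `# EVOLVE-BLOCK-START` / `# EVOLVE-BLOCK-END` are modeled on the paper's example, but treat their exact spelling as an assumption. The helper names `extract_blocks` and `replace_block` are hypothetical.

```python
import re

# Assumed marker strings, modeled on the paper's example; adjust to your own convention.
START, END = "# EVOLVE-BLOCK-START", "# EVOLVE-BLOCK-END"
BLOCK_RE = re.compile(re.escape(START) + r"\n(.*?)" + re.escape(END), re.DOTALL)


def extract_blocks(source: str) -> list[str]:
    """Return the bodies of all marked blocks, i.e. the code that may be evolved."""
    return BLOCK_RE.findall(source)


def replace_block(source: str, index: int, new_body: str) -> str:
    """Swap in a new body for the index-th marked block; everything else is unchanged."""
    match = list(BLOCK_RE.finditer(source))[index]
    start, end = match.span(1)
    return source[:start] + new_body + source[end:]


program = """SCALE = 10

# EVOLVE-BLOCK-START
def heuristic(x):
    return x
# EVOLVE-BLOCK-END

def evaluate():
    # Fixed scaffolding that calls the evolved code.
    return SCALE * heuristic(2)
"""

evolved = replace_block(program, 0, "def heuristic(x):\n    return x * x\n")
print(extract_blocks(evolved))
```

Only the text between the markers changes. `SCALE` and `evaluate` survive every edit, which is what keeps evolved code wired to the evaluator.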

AlphaEvolve supports multiple abstraction levels for solving a problem. It can evolve raw solution strings, constructor functions that build solutions from scratch, search algorithms that explore solution spaces, or intermediate solutions co-evolved with search strategies tailored to them. The right abstraction depends on the problem: for example, constructor functions tend to work better for highly symmetric problems, while custom search algorithms work better for non-symmetric ones.

Prompt sampling

AlphaEvolve uses state-of-the-art LLMs and supports customization through flexible, long-context prompts. These prompts include previously discovered solutions along with system instructions for modifying them. Users can tailor prompts further by adding explicit context (problem descriptions, code, or literature), using stochastic formatting for greater variation, including evaluation results (such as program outputs and scores), and enabling meta-prompt evolution, in which the LLM helps generate and evolve the prompts themselves, which are kept in a separate database of their own.
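
A prompt built this way can be assembled roughly as follows. This is a minimal sketch under stated assumptions: the section headings, the instruction phrasings, and the function name `build_prompt` are hypothetical, and meta-prompt evolution is not shown. The random choice of instruction illustrates what "stochastic formatting" can mean in practice.

```python
import random


def build_prompt(context: str, parent: dict, prior: list[dict], rng: random.Random) -> str:
    """Hypothetical prompt assembly: context, earlier solutions with scores, then the task."""
    # Stochastic formatting: equivalent phrasings vary the prompt from call to call.
    instruction = rng.choice([
        "Improve the current program so that its scores increase.",
        "Propose a change to the current program that raises its metrics.",
        "Suggest an edit to the current program that yields better evaluation results.",
    ])
    sections = [f"## Problem context\n{context}"]
    for i, prog in enumerate(prior, start=1):
        sections.append(f"## Prior solution {i} (scores: {prog['scores']})\n{prog['code']}")
    sections.append(f"## Current program (scores: {parent['scores']})\n{parent['code']}")
    sections.append(f"## Task\n{instruction}")
    return "\n\n".join(sections)


prompt = build_prompt(
    context="Find a large triangle-free graph on 10 vertices.",
    parent={"code": "def construct():\n    return []", "scores": {"score": 20.0}},
    prior=[{"code": "def construct():\n    return []", "scores": {"score": 16.0}}],
    rng=random.Random(0),
)
print(prompt)
```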

Creative generation

The LLMs propose improvements to existing code by analyzing past solutions and generating new variations. Although AlphaEvolve is model-agnostic, its performance improves with stronger LLMs. For most code changes, AlphaEvolve uses a structured diff format that states which code segments to replace and with what; for short programs or blocks that are completely rewritten, it can instead perform a full replacement.
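
A diff of this kind can be applied mechanically. The sketch assumes a SEARCH/REPLACE hunk syntax modeled on the style described in the paper; the exact delimiters and the name `apply_diff` are assumptions. Failing loudly when the search text is missing keeps a malformed LLM edit from silently producing a wrong program.

```python
import re

# Assumed hunk syntax, modeled on the SEARCH/REPLACE diff style described in the paper.
HUNK_RE = re.compile(r"<<<<<<< SEARCH\n(.*?)\n=======\n(.*?)\n>>>>>>> REPLACE", re.DOTALL)


def apply_diff(code: str, diff: str) -> str:
    """Apply each SEARCH/REPLACE hunk in order; fail loudly if a search text is missing."""
    for search, replace in HUNK_RE.findall(diff):
        if search not in code:
            raise ValueError(f"search text not found:\n{search}")
        code = code.replace(search, replace, 1)  # edit only the first match
    return code


code = "def f(x):\n    return x + 1\n"
diff = """<<<<<<< SEARCH
    return x + 1
=======
    return 2 * x + 1
>>>>>>> REPLACE
"""
print(apply_diff(code, diff))
```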

To optimize both speed and quality, AlphaEvolve uses an ensemble of Gemini 2.0 models: Gemini 2.0 Flash for fast, high-volume candidate generation, and Gemini 2.0 Pro for more thoughtful, high-quality suggestions.
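
One simple way to mix the two models is weighted random choice per request. The model identifiers and the 80/20 weights below are hypothetical; this review does not state the actual ratio.

```python
import random
from collections import Counter

# Hypothetical identifiers and sampling weights; the review gives no mixing ratio.
ENSEMBLE = {"gemini-2.0-flash": 0.8, "gemini-2.0-pro": 0.2}


def pick_model(rng: random.Random) -> str:
    """Choose the model for the next request, favoring the fast, cheap one."""
    names = list(ENSEMBLE)
    return rng.choices(names, weights=[ENSEMBLE[n] for n in names], k=1)[0]


rng = random.Random(0)
counts = Counter(pick_model(rng) for _ in range(1_000))
print(counts)  # most requests go to the Flash model
```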

Evaluation

AlphaEvolve evaluates each new proposed solution using a user-defined evaluation function, but also supports additional mechanisms to improve flexibility and efficiency. One is an evaluation cascade, where solutions are tested through progressively harder stages, allowing weaker ones to be filtered out early. Another is LLM-generated feedback, which helps assess qualitative properties (like simplicity) that are hard to quantify in the evaluation function. Evaluations can also be parallelized to speed up the process and avoid bottlenecks in generating new candidate solutions.
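
An evaluation cascade can be sketched as an ordered list of (check, threshold) stages that stops at the first failure, so expensive checks only run on promising candidates. The stage functions `compiles` and `unit_test` and the metric names are hypothetical. A real system would also sandbox `exec` on untrusted code.

```python
from typing import Callable, Sequence

# Each stage: (scoring function, minimum score needed to reach the next stage).
Stage = tuple[Callable[[str], float], float]


def cascade_evaluate(program: str, stages: Sequence[Stage]) -> dict[str, float]:
    """Run stages from cheapest to most expensive, stopping at the first failed threshold."""
    metrics: dict[str, float] = {}
    for level, (score_fn, threshold) in enumerate(stages):
        metrics[f"stage_{level}"] = score_fn(program)
        if metrics[f"stage_{level}"] < threshold:
            break  # `level` stages passed before this one failed
    else:
        level = len(stages)  # every stage passed
    metrics["stages_passed"] = float(level)
    return metrics


def compiles(program: str) -> float:
    """Cheap check: does the candidate even parse?"""
    try:
        compile(program, "<candidate>", "exec")
        return 1.0
    except SyntaxError:
        return 0.0


def unit_test(program: str) -> float:
    """More expensive check (sandbox this in practice): does f square its input?"""
    namespace: dict = {}
    exec(program, namespace)
    return float(namespace["f"](3) == 9)


stages = [(compiles, 1.0), (unit_test, 1.0)]
print(cascade_evaluate("def f(x) return x", stages))               # stops after stage 0
print(cascade_evaluate("def f(x):\n    return x + 1\n", stages))  # fails stage 1
print(cascade_evaluate("def f(x):\n    return x * x\n", stages))  # passes both
```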

AlphaEvolve supports optimizing multiple evaluation metrics simultaneously. Even when a single metric is the main focus, adding others often improves performance by encouraging diversity in high-performing solutions. This diversity enriches the prompts given to the LLMs, which in turn helps generate more creative and effective solutions.

Evolution

As AlphaEvolve evolves solutions, it stores them with their evaluation results in an evolutionary database. This database is designed to resurface valuable past solutions in future generations, balancing exploitation of the best programs against exploration of diverse alternatives. To achieve this, AlphaEvolve uses a hybrid strategy inspired by the MAP-Elites algorithm and island-based population models, which helps maintain both performance and diversity in the evolutionary process.
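
The toy island model below illustrates the two ideas. Each island keeps a MAP-Elites archive (the best program per "behavior cell"), and islands periodically exchange their best programs. The behavior descriptor (program length buckets), the 50/50 exploit probability, and the ring migration are all assumptions. This is not AlphaEvolve's actual database design.

```python
import random
from dataclasses import dataclass, field


def cell_of(program: str) -> tuple[int]:
    """Hypothetical behavior descriptor: program length, bucketed by 50 characters."""
    return (len(program) // 50,)


@dataclass
class Island:
    # MAP-Elites archive: the best (score, program) found so far in each behavior cell.
    elites: dict[tuple[int], tuple[float, str]] = field(default_factory=dict)

    def add(self, program: str, score: float) -> None:
        cell = cell_of(program)
        if cell not in self.elites or score > self.elites[cell][0]:
            self.elites[cell] = (score, program)

    def best(self) -> tuple[float, str]:
        return max(self.elites.values())

    def sample_parent(self, rng: random.Random, exploit: float = 0.5) -> str:
        """Exploit the island's best program or explore a random elite."""
        if rng.random() < exploit:
            return self.best()[1]
        return rng.choice(list(self.elites.values()))[1]


def migrate(islands: list[Island]) -> None:
    """Ring migration: each island's best program is offered to its neighbor."""
    bests = [island.best() for island in islands]  # snapshot before any insertion
    for i, (score, program) in enumerate(bests):
        islands[(i + 1) % len(islands)].add(program, score)


islands = [Island(), Island()]
islands[0].add("a", 1.0)
islands[0].add("b", 2.0)       # same cell as "a", so it replaces it
islands[0].add("x" * 60, 0.5)  # different cell, kept for diversity
islands[1].add("c", 0.1)
migrate(islands)
print(islands[1].best())  # (2.0, 'b')
```

Keeping one elite per cell preserves weaker but different programs, while migration lets a strong program spread without collapsing every island onto it at once.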

Results

Faster matrix multiplication via finding novel algorithms for tensor decomposition

Matrix multiplication is a core operation in many computational tasks, and finding faster algorithms for it involves discovering low-rank tensor decompositions. Despite decades of effort, even simple cases like multiplying 3×3 matrices remain unsolved in terms of finding the minimal rank.
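
For reference, one common way to write this connection (the notation is ours, not necessarily the paper's). The matrix multiplication tensor for n×n matrices, a rank-R decomposition of it, and the algorithm that decomposition induces are:

```latex
\begin{aligned}
\mathcal{T}_n\big[(i,j),(j',k),(i',k')\big] &= \delta_{ii'}\,\delta_{jj'}\,\delta_{kk'}
  = \sum_{r=1}^{R} u_{r,(i,j)}\, v_{r,(j',k)}\, w_{r,(i',k')},\\
m_r &= \Big(\sum_{i,j} u_{r,(i,j)} A_{ij}\Big)\Big(\sum_{j,k} v_{r,(j,k)} B_{jk}\Big),
  \qquad r = 1,\dots,R,\\
C_{ik} &= \sum_{r=1}^{R} w_{r,(i,k)}\, m_r = \sum_{j} A_{ij} B_{jk}.
\end{aligned}
```

Each m_r costs one scalar multiplication, so a rank-R decomposition gives an algorithm with R multiplications. The schoolbook method corresponds to rank n³ (8 for 2×2), while Strassen's algorithm shows that rank 7 suffices for 2×2 matrices.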

AlphaEvolve approaches this challenge by starting with a standard gradient-based algorithm and evolving it into more effective tensor decomposition methods. It evaluates each evolved program by running it on various matrix multiplication targets, using random initializations and an evaluation cascade. Performance is measured by the lowest rank achieved and how consistently that rank is reached. To obtain exact decompositions, evaluations round the resulting entries to the nearest integer or half-integer, and the prompt explicitly encourages near-integral outputs.
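
The rounding step can be illustrated with a simplified scorer. It snaps factor matrices to the nearest half-integer and accepts the result only if it reconstructs the matrix multiplication tensor exactly. The function names and metric keys are hypothetical. The real evaluator also measures how consistently a rank is reached across random initializations, which is omitted here. Strassen's rank-7 factors serve as test input.

```python
import numpy as np


def matmul_tensor(n: int) -> np.ndarray:
    """T[a*n+b, b*n+c, a*n+c] = 1: A[a, b] times B[b, c] contributes to C[a, c]."""
    T = np.zeros((n * n, n * n, n * n))
    for a in range(n):
        for b in range(n):
            for c in range(n):
                T[a * n + b, b * n + c, a * n + c] = 1.0
    return T


def score_decomposition(U, V, W, n: int) -> dict[str, float]:
    """Snap factors to the nearest half-integer, then check for an exact decomposition."""
    U, V, W = (np.round(2 * np.asarray(F, dtype=float)) / 2 for F in (U, V, W))
    exact = np.array_equal(np.einsum("ri,rj,rk->ijk", U, V, W), matmul_tensor(n))
    # Lower rank is better; report its negative so that every metric is maximized.
    return {"valid": float(exact), "neg_rank": -float(len(U)) if exact else -np.inf}


# Strassen's rank-7 factors for 2x2 matrices, flattened row-major (A11, A12, A21, A22).
U = [[1, 0, 0, 1], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 0, 1], [1, 1, 0, 0], [-1, 0, 1, 0], [0, 1, 0, -1]]
V = [[1, 0, 0, 1], [1, 0, 0, 0], [0, 1, 0, -1], [-1, 0, 1, 0], [0, 0, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1]]
W = [[1, 0, 0, 1], [0, 0, 1, -1], [0, 1, 0, 1], [1, 0, 1, 0], [-1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0]]

# Slightly perturbed, "near-integral" output, as a gradient-based search might produce.
noisy = [np.asarray(F) + 0.1 for F in (U, V, W)]
print(score_decomposition(*noisy, n=2))  # {'valid': 1.0, 'neg_rank': -7.0}
```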

AlphaEvolve improved the state of the art on 14 matrix multiplication targets. Its most notable achievement is an algorithm that multiplies two 4×4 complex-valued matrices using only 48 scalar multiplications. This breaks a 56-year-old barrier: over fields of characteristic 0, the best previous method was Strassen's algorithm applied recursively, which needs 7 × 7 = 49 multiplications.

These results were obtained through substantial modifications of the initial algorithm, and in some cases, seeding with human-designed ideas further improved outcomes.

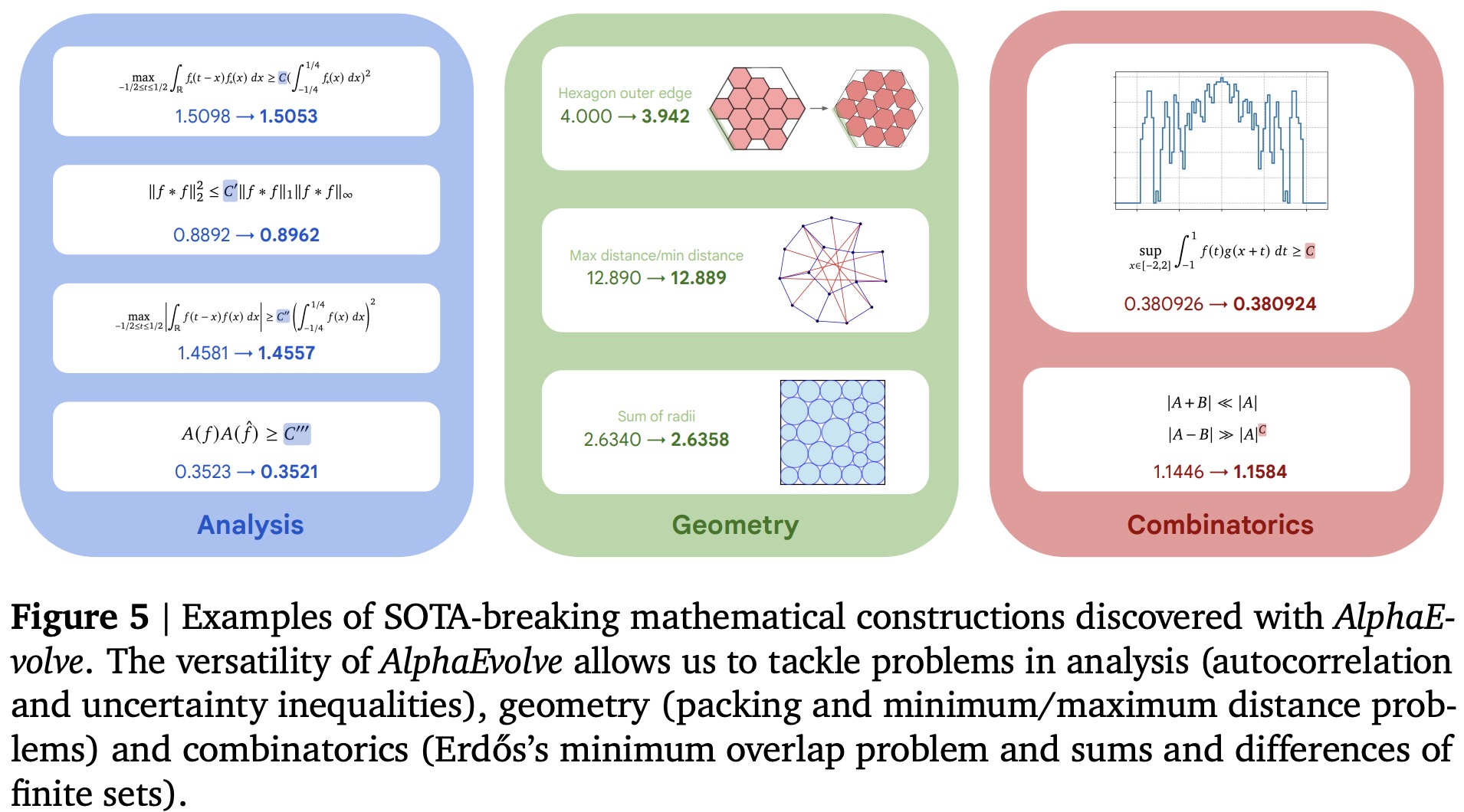

Finding tailored search algorithms for a wide range of open mathematical problems

AlphaEvolve has proven to be a powerful tool for mathematical discovery, capable of finding optimal or near-optimal constructions across a wide range of open problems. These problems span multiple areas of mathematics, including analysis, combinatorics, number theory, and geometry. Applied to over 50 curated problems with various parameter settings, AlphaEvolve was able to rediscover the best-known solutions in 75% of cases and improve upon them in 20%, starting from simple or random constructions.

Rather than directly evolving solutions, AlphaEvolve evolves heuristic search algorithms. Each generation of the system creates a new search program with a fixed time budget, aiming to improve on the best result found so far. This approach naturally leads to multi-stage adaptive search strategies: early heuristics make large gains from simple starting points, while later ones fine-tune near-optimal solutions. This layered, automated discovery process enables AlphaEvolve to outperform traditional, manually crafted methods.
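
The staged structure can be mimicked with a toy. Each "generation" runs a search heuristic under a fixed time budget, starting from the best solution found so far. In AlphaEvolve the LLM writes the heuristic code itself. Here a fixed hill climber with a decreasing step size stands in for successive evolved programs, and the objective is hypothetical.

```python
import random
import time
from typing import Callable

Objective = Callable[[list[float]], float]


def local_search(start: list[float], objective: Objective, step: float,
                 budget_s: float, rng: random.Random) -> list[float]:
    """Stand-in for one evolved heuristic: hill climbing until its time budget runs out."""
    best, best_val = start, objective(start)
    deadline = time.monotonic() + budget_s
    while time.monotonic() < deadline:
        candidate = [x + rng.gauss(0.0, step) for x in best]
        value = objective(candidate)
        if value > best_val:  # keep only improvements
            best, best_val = candidate, value
    return best


def objective(x: list[float]) -> float:
    """Hypothetical toy target with its maximum (0.0) at x = (3, ..., 3)."""
    return -sum((xi - 3.0) ** 2 for xi in x)


rng = random.Random(0)
solution = [0.0] * 5
# Each stage starts from the best result so far: coarse moves first, fine-tuning later.
for step in (1.0, 0.1, 0.01):
    solution = local_search(solution, objective, step, budget_s=0.05, rng=rng)
print(round(objective(solution), 4))
```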

Notable achievements include improved bounds on autocorrelation inequalities and uncertainty principles in analysis, a new upper bound for Erdős's minimum overlap problem in number theory, and a new record for the kissing number in 11 dimensions: a configuration of 593 non-overlapping unit spheres that all touch a central unit sphere, beating the previous record of 592.
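
A candidate kissing configuration is easy to verify. This is why the problem suits an automated evaluator, whose score can simply be the number of spheres when the check passes. The sketch uses unit spheres and a floating-point tolerance, both assumptions; certifying a record would require exact arithmetic. The function name is hypothetical.

```python
import itertools
import math


def is_kissing_configuration(centers: list[tuple[float, ...]], tol: float = 1e-9) -> bool:
    """True if unit spheres at `centers` all touch a unit sphere at the origin without overlapping."""
    # Touching the central sphere: each center lies at distance exactly 2 from the origin.
    if any(abs(math.hypot(*c) - 2.0) > tol for c in centers):
        return False
    # No overlaps: every pair of centers is at least 2 apart.
    return all(math.dist(p, q) >= 2.0 - tol for p, q in itertools.combinations(centers, 2))


# In 2 dimensions, six circles fit around a central one (a hexagonal arrangement).
hexagon = [(2 * math.cos(k * math.pi / 3), 2 * math.sin(k * math.pi / 3)) for k in range(6)]
print(is_kissing_configuration(hexagon))                 # True
print(is_kissing_configuration(hexagon + [(0.0, 2.0)]))  # False: a seventh circle overlaps
```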

Optimizing Google’s computing ecosystem

Improving data center scheduling

Efficient job scheduling in Google's data centers is crucial for maximizing resource usage and reducing waste from stranded resources, which arise when a machine runs out of one resource (such as memory) while another (such as CPU) is still available. The problem is modeled as vector bin packing: jobs with CPU and memory demands must be assigned to machines with corresponding capacities.

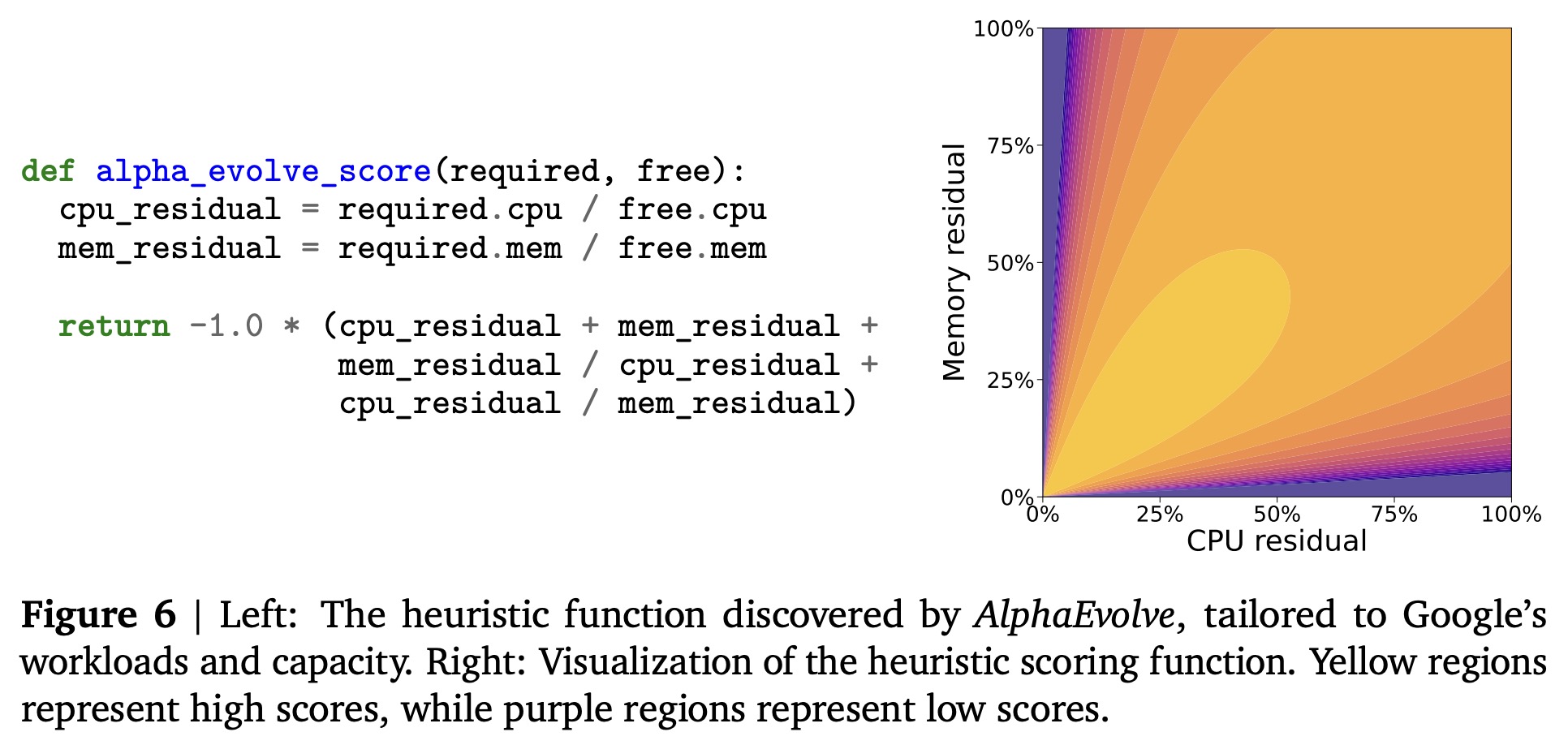

An early version of AlphaEvolve was used to evolve a heuristic function that ranks machines for job assignment based on their available resources. This heuristic integrates into Borg, Google’s scheduler, without overriding its core logic, ensuring correctness.
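
The shape of such a heuristic can be sketched as a function that scores one machine for one job, used by a placement step that picks the best-scoring feasible machine. The scoring rule below (penalize imbalance between leftover CPU and memory) is hypothetical and is not the deployed heuristic. Resources are assumed to be normalized fractions of machine capacity.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu: float  # assumed: fraction of machine capacity, 0..1
    mem: float


def score_machine(job: Resources, free: Resources) -> float:
    """Hypothetical priority: prefer machines whose leftover CPU and memory stay balanced."""
    if job.cpu > free.cpu or job.mem > free.mem:
        return float("-inf")  # the job does not fit here
    left_cpu, left_mem = free.cpu - job.cpu, free.mem - job.mem
    # Imbalance is what strands resources: one runs out while the other sits idle.
    return -abs(left_cpu - left_mem)


def pick_machine(job: Resources, machines: list[Resources]) -> int:
    """Stand-in for the scheduler's placement step: choose the highest-ranked feasible machine."""
    scores = [score_machine(job, m) for m in machines]
    best = max(range(len(machines)), key=scores.__getitem__)
    if scores[best] == float("-inf"):
        raise RuntimeError("no machine can fit the job")
    return best


machines = [Resources(0.9, 0.3), Resources(0.5, 0.5), Resources(0.1, 0.9)]
print(pick_machine(Resources(0.2, 0.2), machines))  # 1: leaves 0.3 CPU and 0.3 memory
```

Because the evolved part is a pure scoring function, feasibility checks and placement stay in fixed code. This mirrors the review's point that the heuristic does not override the scheduler's core logic.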

Using a data center simulator and real workload data, AlphaEvolve iteratively refined this heuristic. The resulting solution outperformed the existing production heuristic and, after validation, was deployed fleet-wide. It now recovers on average 0.7% of Google’s compute resources that would otherwise remain unused. AlphaEvolve was favored over deep reinforcement learning due to its better performance, simplicity, interpretability, and ease of integration into critical infrastructure.

Enhancing Gemini kernel engineering

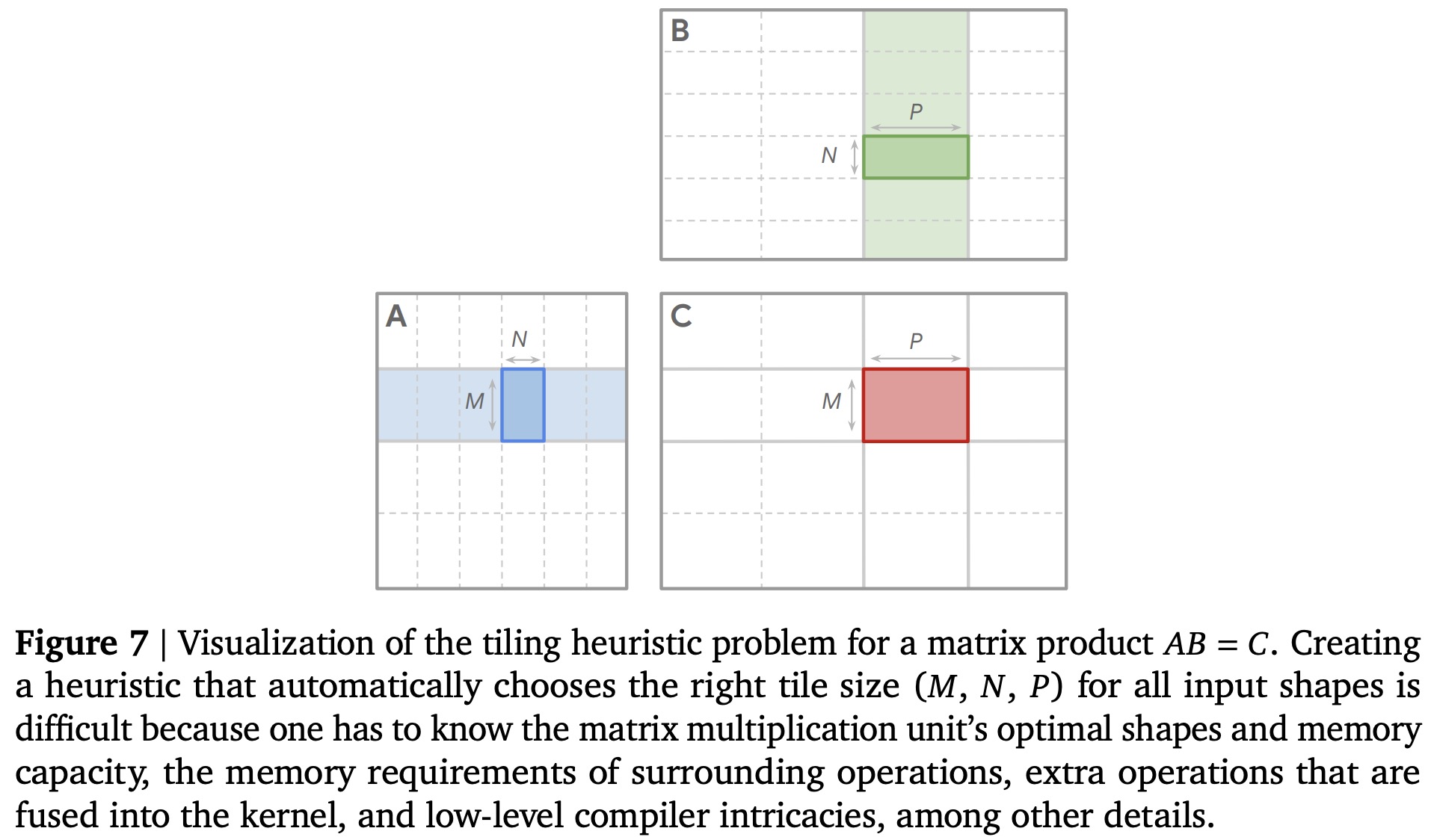

Training large models like Gemini requires efficient kernel execution, especially for matrix multiplication. These operations rely heavily on tiling strategies, which split large computations into smaller chunks to improve performance on hardware accelerators. Traditionally, these tiling heuristics are either manually designed (requiring deep hardware expertise) or autotuned through costly and time-consuming searches.
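
The idea of tiling can be shown with a plain NumPy loop that computes each output tile by accumulating products of input tiles. The tile sizes `tm`, `tn`, and `tk` are exactly the knobs a tiling heuristic must choose. This is an illustrative sketch of the concept, not an accelerator kernel.

```python
import numpy as np


def tiled_matmul(A: np.ndarray, B: np.ndarray, tm: int, tn: int, tk: int) -> np.ndarray:
    """Compute A @ B one (tm x tn) output tile at a time, stepping through K in tk chunks."""
    M, K = A.shape
    if B.shape[0] != K:
        raise ValueError("inner dimensions must match")
    N = B.shape[1]
    C = np.zeros((M, N), dtype=np.result_type(A, B))
    for i in range(0, M, tm):
        for j in range(0, N, tn):
            for k in range(0, K, tk):
                # On an accelerator, these two input tiles would be staged in fast on-chip memory.
                C[i:i + tm, j:j + tn] += A[i:i + tm, k:k + tk] @ B[k:k + tk, j:j + tn]
    return C


rng = np.random.default_rng(0)
A, B = rng.normal(size=(37, 53)), rng.normal(size=(53, 29))
print(np.allclose(tiled_matmul(A, B, tm=16, tn=8, tk=32), A @ B))  # True
```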

AlphaEvolve was used to automatically generate and optimize tiling heuristics for a key matrix multiplication kernel in Gemini’s training pipeline. It evolved code that minimizes kernel runtime across a range of input shapes collected from real usage. The resulting heuristic improved average kernel speed by 23% compared to expert-designed versions and reduced Gemini’s total training time by 1%.
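
The evaluation setup can be sketched as a heuristic that maps a matmul shape to tile sizes, plus an evaluator that averages its speedup over a baseline across a set of shapes. The real evaluator measures kernel runtime on hardware. Here `toy_cost` (padded work plus a per-tile overhead, with a fast-memory limit) is a hypothetical stand-in, and its constants, the shapes, and both heuristics are made up for illustration.

```python
import math
from typing import Callable

Shape = tuple[int, int, int]   # (M, N, K) of a matmul
Tiles = tuple[int, int, int]   # (tm, tn, tk) tile sizes
MEMORY_LIMIT = 64 * 1024       # hypothetical fast-memory capacity, in elements
TILE_OVERHEAD = 5_000          # hypothetical fixed cost per tile, in multiply-add units


def toy_cost(shape: Shape, tiles: Tiles) -> float:
    """Stand-in for a measured runtime: padded work plus a fixed per-tile overhead."""
    (M, N, K), (tm, tn, tk) = shape, tiles
    if tm * tk + tk * tn + tm * tn > MEMORY_LIMIT:
        return math.inf  # the three tiles do not fit in fast memory
    gm, gn, gk = math.ceil(M / tm), math.ceil(N / tn), math.ceil(K / tk)
    return (gm * tm) * (gn * tn) * (gk * tk) + TILE_OVERHEAD * gm * gn * gk


def baseline(shape: Shape) -> Tiles:
    return (128, 128, 128)  # one fixed tiling for every shape


def candidate(shape: Shape) -> Tiles:
    """An example 'evolved' rule: do not pad small dimensions up to a full tile."""
    M, N, K = shape
    return (min(M, 128), min(N, 128), min(K, 128))


def evaluate(heuristic: Callable[[Shape], Tiles], shapes: list[Shape]) -> float:
    """Mean speedup over the baseline across the given shapes (higher is better)."""
    ratios = [toy_cost(s, baseline(s)) / toy_cost(s, heuristic(s)) for s in shapes]
    return sum(ratios) / len(ratios)


shapes = [(1024, 1024, 1024), (100, 4096, 512), (8, 2048, 2048)]
print(evaluate(baseline, shapes), evaluate(candidate, shapes))
```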

This automated approach cut kernel optimization time from months to days, freeing engineers to focus on higher-level tasks. The new heuristic has been deployed in production, directly enhancing Gemini’s training efficiency. Notably, this marks a case where Gemini, via AlphaEvolve, helped optimize its own training process.

Assisting in hardware circuit design

Designing specialized hardware like Google's TPUs is a complex, time-consuming process, especially when optimizing Register-Transfer Level (RTL) code for performance, power, and area. AlphaEvolve was used to optimize a highly refined Verilog implementation of a key arithmetic circuit in the TPU's matrix multiplication unit, with the goal of reducing area and power usage while maintaining functional correctness. It found a simple but effective optimization (removing unnecessary bits) that TPU engineers validated and that would otherwise only have been caught later, by synthesis tools.

This improvement, now integrated into an upcoming TPU, marks Gemini’s first direct contribution to hardware design via AlphaEvolve. By generating changes directly in Verilog, AlphaEvolve aligns with industry standards, making its suggestions more usable and trustworthy.

Directly optimizing compiler-generated code

AlphaEvolve was used to optimize the XLA-generated intermediate representations of the FlashAttention kernel. These IRs, used for GPU inference, are typically complex, compiler-generated, and already highly optimized, making direct improvements difficult. AlphaEvolve successfully optimized both the FlashAttention kernel and its associated pre- and postprocessing code. It achieved a 32% speedup in the kernel itself and a 15% improvement in surrounding code. All changes were rigorously validated for correctness using randomized inputs and expert review.
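
Validation with randomized inputs can be sketched as comparing an optimized implementation against a simple reference on random shapes. The example uses NumPy stand-ins, not XLA IR. The "optimized" version is a FlashAttention-style blockwise computation with an online softmax, checked against naive attention. The helper names, trial count, and tolerances are assumptions.

```python
import numpy as np


def reference_attention(Q, K, V):
    """Naive attention: materializes the full softmax(Q K^T / sqrt(d)) matrix."""
    S = Q @ K.T / np.sqrt(Q.shape[1])
    P = np.exp(S - S.max(axis=1, keepdims=True))
    return (P / P.sum(axis=1, keepdims=True)) @ V


def blockwise_attention(Q, K, V, block: int = 16):
    """FlashAttention-style online softmax: stream over key/value blocks with running stats."""
    n, d = Q.shape
    out = np.zeros((n, V.shape[1]))
    row_max = np.full((n, 1), -np.inf)
    row_sum = np.zeros((n, 1))
    for s in range(0, K.shape[0], block):
        S = Q @ K[s:s + block].T / np.sqrt(d)
        new_max = np.maximum(row_max, S.max(axis=1, keepdims=True))
        scale = np.exp(row_max - new_max)  # rescale earlier partial results
        P = np.exp(S - new_max)
        row_sum = row_sum * scale + P.sum(axis=1, keepdims=True)
        out = out * scale + P @ V[s:s + block]
        row_max = new_max
    return out / row_sum


def validate(candidate, reference, trials: int = 20, seed: int = 0) -> bool:
    """Compare a candidate against the reference on random shapes and inputs."""
    rng = np.random.default_rng(seed)
    for _ in range(trials):
        n, m, d = rng.integers(1, 64, size=3)
        Q, K, V = rng.normal(size=(n, d)), rng.normal(size=(m, d)), rng.normal(size=(m, d))
        if not np.allclose(candidate(Q, K, V), reference(Q, K, V), atol=1e-8):
            return False
    return True


print(validate(blockwise_attention, reference_attention))  # True
```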

Ablations

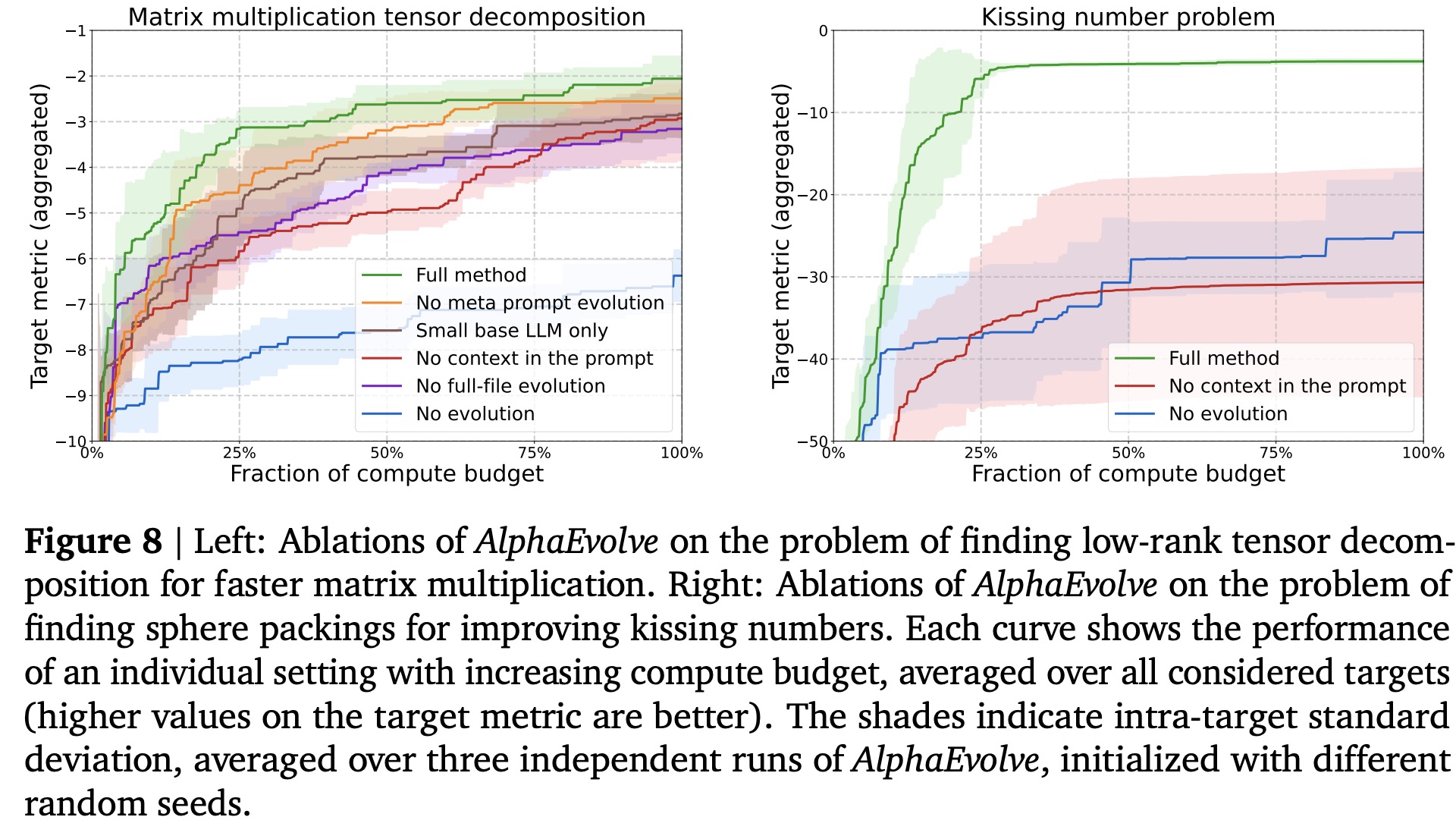

The authors conducted ablation studies on two tasks, tensor decomposition for faster matrix multiplication and computing lower bounds on kissing numbers, to evaluate the impact of key system components.

They tested five simplified variants: removing the evolutionary loop, omitting problem-specific context in prompts, disabling automatic prompt optimization, evolving only part of the code, and using only a small language model.

The results showed that each component contributes significantly to AlphaEvolve’s performance. Removing any of them led to noticeably worse outcomes, highlighting the importance of the evolutionary process, rich context, meta-prompting, full-code evolution, and the use of diverse, powerful LLMs.
