Paper Review: NitroGen: A Foundation Model for Generalist Gaming Agents

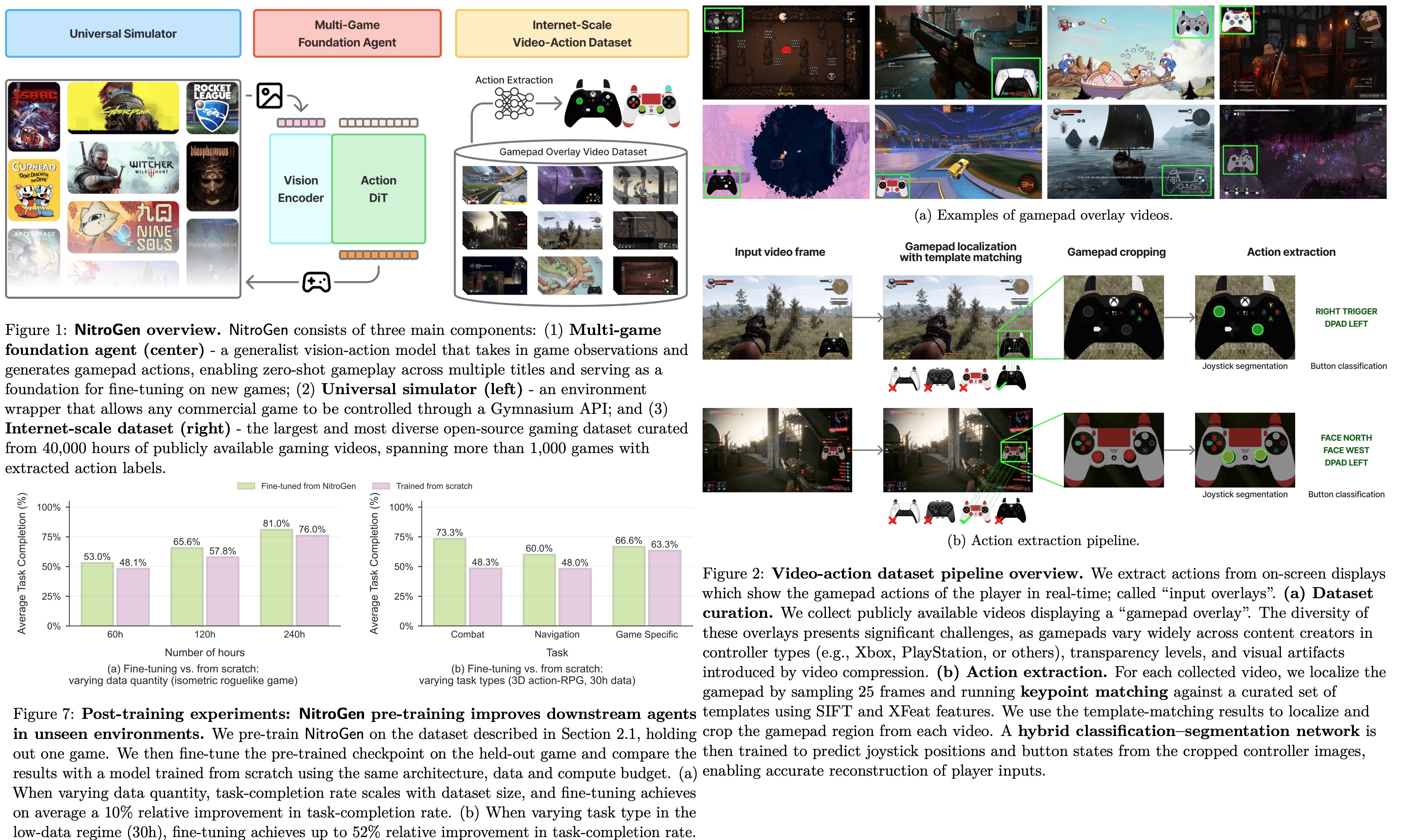

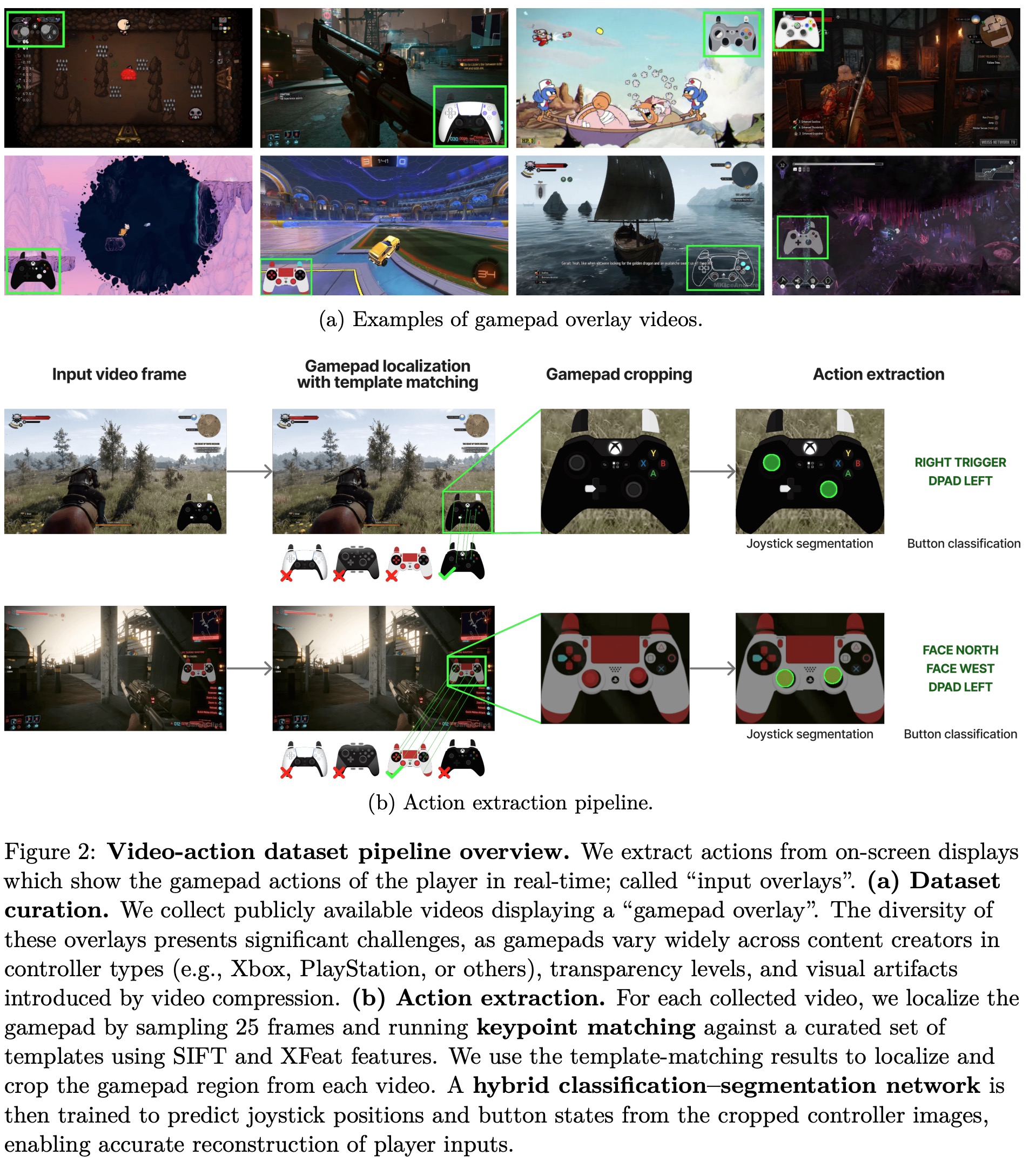

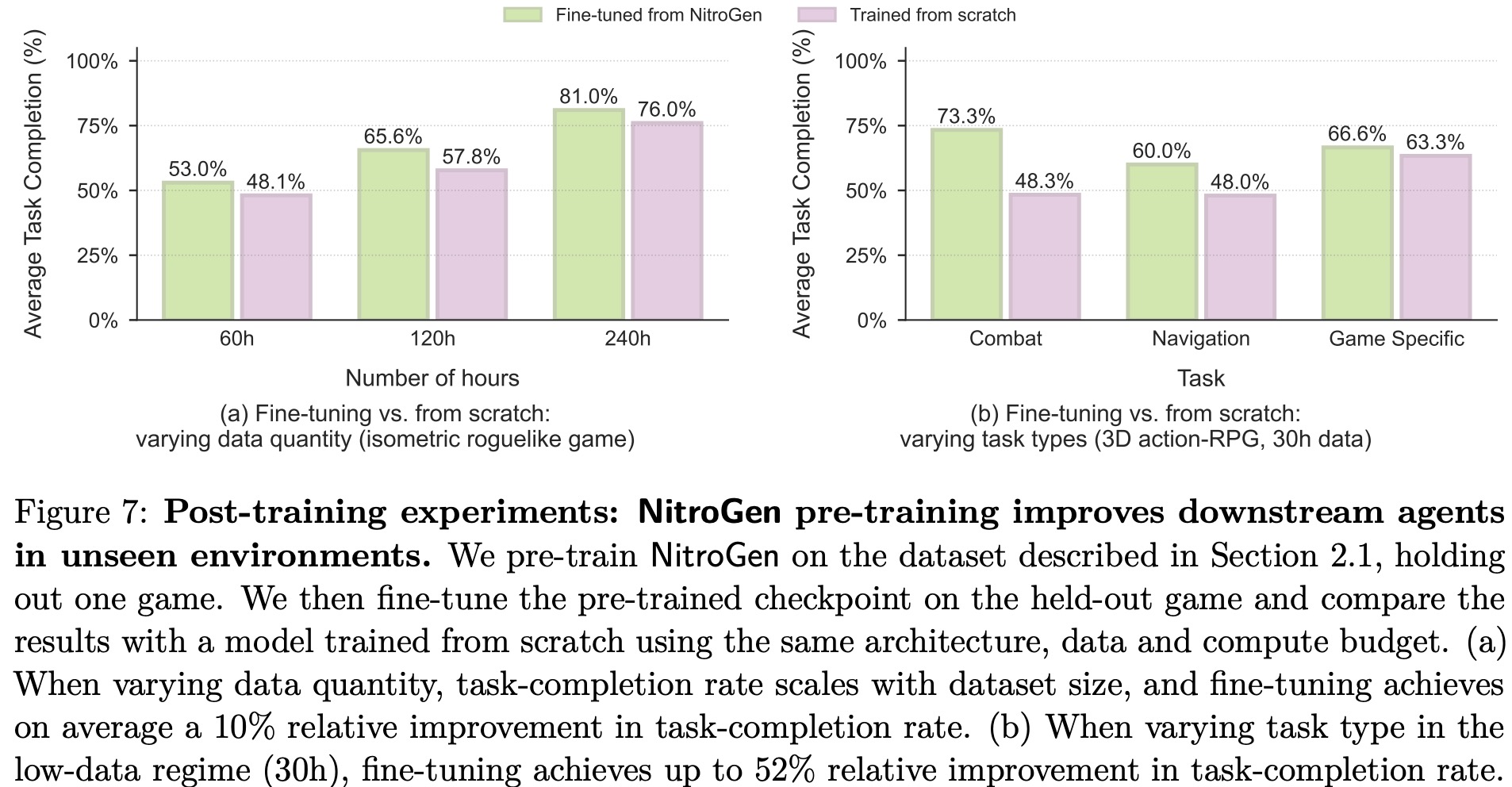

NitroGen is a general-purpose vision–action model for game-playing agents, trained on 40k hours of gameplay videos spanning over 1k games. The authors build an internet-scale dataset by automatically extracting player actions from publicly available gameplay videos, evaluate generalization on a multi-game benchmark, and train a single unified model using large-scale behavior cloning. The resulting agent performs well across very different game genres (3D action games, 2D platformers, and exploration in procedurally generated worlds) and transfers effectively to unseen games, achieving up to a 52% improvement in task success compared to training from scratch.

The approach

Internet-scale multi-game video-action dataset

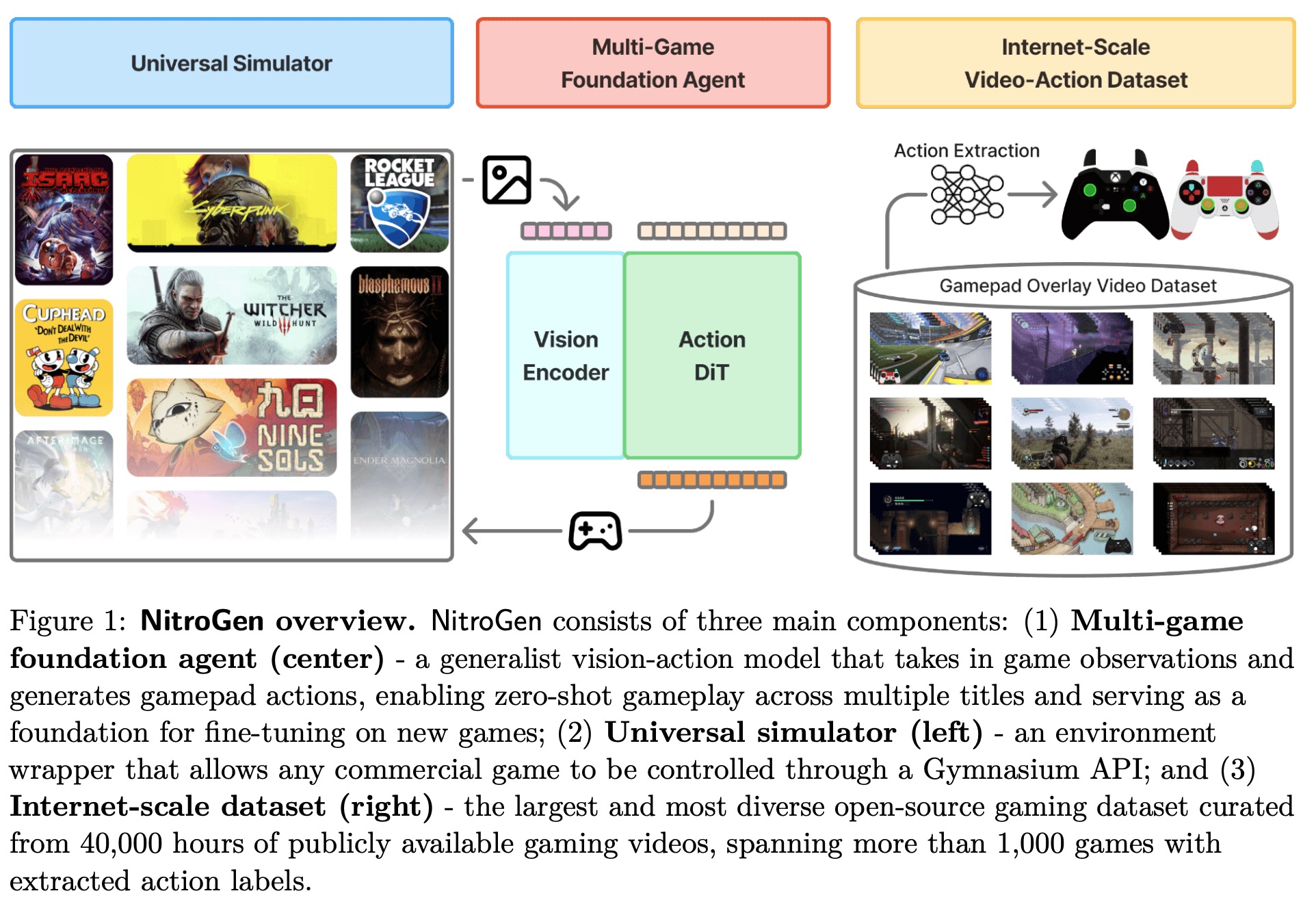

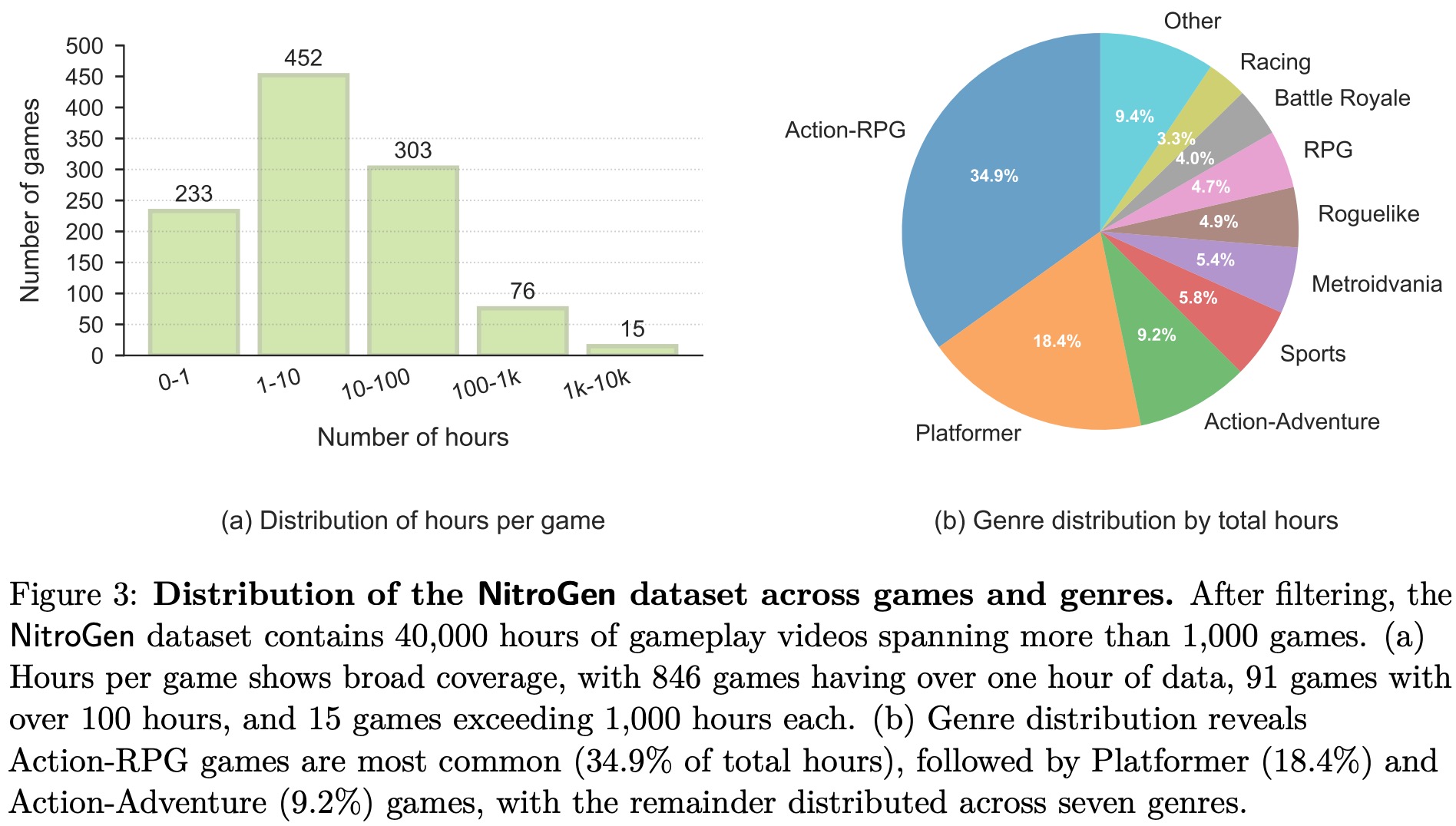

The authors address the lack of action labels in internet gameplay videos by using videos with on-screen controller overlays that show the player’s inputs. They collect 71k hours of such raw video, covering over 1k games and a wide range of genres, skill levels, and creators; the 40k hours used for training are what remain after the quality filtering described below. The authors describe the result as the largest labeled video–action dataset for games to date.

Player actions are extracted through a three-stage pipeline. First, template matching locates the controller overlay using feature matching against 300 known controller templates. Second, a fine-tuned SegFormer segmentation model parses button presses and joystick positions from consecutive frames, trained on millions of synthetic overlay examples to handle visual variability. Third, quality filtering removes low-signal segments to avoid bias toward “no-action” predictions and masks the controller overlay in the final data to prevent shortcut learning.
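To make the third stage concrete, here is a minimal sketch of filtering out low-signal segments. The segment length, the 50% density threshold, and the function names are assumptions chosen for illustration; the paper's actual filtering criteria may differ.

```python
from typing import List, Sequence, Tuple


def action_density(frames: Sequence[Sequence[float]], eps: float = 1e-3) -> float:
    """Fraction of frames in which any button or joystick axis is non-neutral."""
    if not frames:
        return 0.0
    active = sum(1 for frame in frames if any(abs(v) > eps for v in frame))
    return active / len(frames)


def filter_low_action_segments(
    actions: Sequence[Sequence[float]],
    segment_len: int = 4,      # hypothetical segment size, in frames
    min_density: float = 0.5,  # hypothetical threshold
) -> List[Tuple[int, int]]:
    """Return (start, end) frame ranges whose action density passes the threshold."""
    kept = []
    for start in range(0, len(actions), segment_len):
        segment = actions[start:start + segment_len]
        if action_density(segment) >= min_density:
            kept.append((start, start + len(segment)))
    return kept


# Four idle frames followed by four mostly active frames.
extracted = [[0.0, 0.0]] * 4 + [[1.0, 0.0], [0.0, 0.5], [0.0, 0.0], [1.0, 1.0]]
kept_ranges = filter_low_action_segments(extracted)
```

In this toy input the idle first segment is dropped and only the second range is kept, which is the effect the authors want: fewer idle frames means less pressure on the policy to predict "no action" by default.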

Evaluation suite

The authors develop a universal simulator that wraps commercial video games with a Gymnasium-style API, enabling frame-by-frame control without modifying game code. By intercepting the game engine’s system clock, the simulator allows synchronized interaction while preserving normal game physics, which the authors verify empirically.

Additionally, they define a unified observation and action space shared across all games: single RGB frames as observations, and a standardized controller-based action vector combining discrete button presses and continuous joystick inputs. This design facilitates direct policy transfer between different titles.
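The interface described above can be sketched as follows. This is a toy stand-in, not the authors' simulator: the button layout, the class names, and the blank-frame renderer are all hypothetical, and only the `reset`/`step` return shapes follow the Gymnasium convention.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical button layout; the paper's exact action vector may differ.
BUTTONS: Tuple[str, ...] = ("a", "b", "x", "y", "lb", "rb", "start", "select")


@dataclass
class GamepadAction:
    """Discrete button presses plus two continuous joysticks in [-1, 1]."""
    buttons: Dict[str, bool]
    left_stick: Tuple[float, float] = (0.0, 0.0)
    right_stick: Tuple[float, float] = (0.0, 0.0)

    def to_vector(self) -> List[float]:
        pressed = [1.0 if self.buttons.get(name, False) else 0.0 for name in BUTTONS]
        sticks = [max(-1.0, min(1.0, v)) for v in (*self.left_stick, *self.right_stick)]
        return pressed + sticks


class ToyFrameSteppedGame:
    """Stand-in for a wrapped game: time advances only when step() is called."""

    def __init__(self, width: int = 4, height: int = 4) -> None:
        self.width, self.height = width, height
        self.frame_index = 0

    def _render(self) -> List[List[List[int]]]:
        # Blank RGB frame with shape height x width x 3.
        return [[[0, 0, 0] for _ in range(self.width)] for _ in range(self.height)]

    def reset(self):
        self.frame_index = 0
        return self._render(), {"frame_index": self.frame_index}

    def step(self, action: GamepadAction):
        vector = action.to_vector()  # same layout for every game
        self.frame_index += 1        # exactly one frame per call
        info = {"frame_index": self.frame_index, "action": vector}
        return self._render(), 0.0, False, False, info


env = ToyFrameSteppedGame()
obs, info = env.reset()
jump_right = GamepadAction(buttons={"a": True}, left_stick=(1.0, 0.0))
obs, reward, terminated, truncated, info = env.step(jump_right)
```

Because every game consumes the same vector layout, a policy's output head does not need to change between titles, which is what makes direct transfer possible.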

Using the simulator, they build a multi-game evaluation benchmark with 10 games and 30 tasks across both 2D and 3D genres. The tasks cover combat, navigation, and game-specific mechanics, with clearly defined start and goal states, and success measured via human evaluation.

NitroGen foundation model

NitroGen uses a generative, diffusion-style architecture to predict sequences of player actions from visual input. It applies flow matching to generate chunks of future actions conditioned on a single RGB game frame. The model builds on the GR00T N1 design, using a SigLIP-2 vision transformer to encode 256×256 frames and a diffusion transformer to produce 16-action sequences per forward pass via self- and cross-attention over image tokens.
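As a rough illustration of how flow matching turns noise into an action chunk, the sketch below integrates a velocity field with Euler steps. Everything here is an assumption for illustration: the time convention (noise at t=0, actions at t=1), the number of integration steps, the action dimension, and the toy velocity function, which stands in for the diffusion transformer conditioned on image tokens.

```python
import random
from typing import Callable, List

Chunk = List[List[float]]  # chunk_len x action_dim


def sample_action_chunk(
    velocity_fn: Callable[[Chunk, float], Chunk],
    chunk_len: int = 16,   # 16 actions per chunk, as in the review
    action_dim: int = 4,   # hypothetical action dimension
    num_steps: int = 8,    # hypothetical number of Euler steps
    seed: int = 0,
) -> Chunk:
    """Integrate dx/dt = v(x, t) from t=0 (Gaussian noise) to t=1 (actions)."""
    rng = random.Random(seed)
    x = [[rng.gauss(0.0, 1.0) for _ in range(action_dim)] for _ in range(chunk_len)]
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt
        v = velocity_fn(x, t)
        x = [[xi + dt * vi for xi, vi in zip(row_x, row_v)] for row_x, row_v in zip(x, v)]
    return x


def make_toy_velocity(target: Chunk) -> Callable[[Chunk, float], Chunk]:
    """Exact conditional velocity for the straight path x_t = (1 - t) x_0 + t x_1.

    A trained model would predict this velocity from the image tokens instead
    of being handed the target chunk.
    """
    def velocity(x: Chunk, t: float) -> Chunk:
        return [[(ti - xi) / (1.0 - t) for xi, ti in zip(row_x, row_t)]
                for row_x, row_t in zip(x, target)]
    return velocity


target_chunk = [[0.5, -0.5, 1.0, 0.0] for _ in range(16)]
chunk = sample_action_chunk(make_toy_velocity(target_chunk))
```

With the exact velocity of a straight path, the Euler integration lands on the target chunk regardless of the starting noise. In the real model, the whole 16-step chunk is produced by one sampling run, so consecutive actions are generated jointly rather than one frame at a time.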

Empirically, conditioning on more than one past frame provides no benefit, so the model relies on a single context frame and multi-action chunk generation to improve temporal consistency. Training uses a standard flow-matching objective with image augmentations, AdamW optimization, a warmup–stable–decay learning-rate schedule, and exponential moving average weights.
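Two of the training ingredients named above are simple enough to sketch directly. The step counts, the linear decay shape, and the EMA decay value below are illustrative assumptions; the review does not state the paper's hyperparameters.

```python
from typing import List


def wsd_lr(
    step: int,
    total_steps: int,
    warmup_steps: int,
    decay_steps: int,
    peak_lr: float = 1e-4,  # hypothetical peak learning rate
    min_lr: float = 0.0,
) -> float:
    """Warmup-stable-decay: linear ramp up, flat plateau, linear ramp down."""
    decay_start = total_steps - decay_steps
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    if step < decay_start:
        return peak_lr
    progress = min(1.0, (step - decay_start) / max(1, decay_steps))
    return peak_lr + (min_lr - peak_lr) * progress


def ema_update(ema_weights: List[float], weights: List[float], decay: float = 0.999) -> List[float]:
    """Exponential moving average of model weights, applied after each optimizer step."""
    return [decay * e + (1.0 - decay) * w for e, w in zip(ema_weights, weights)]


# Learning rate at a few points of a 100-step run with 10 warmup and 20 decay steps.
schedule = [wsd_lr(s, total_steps=100, warmup_steps=10, decay_steps=20, peak_lr=1.0)
            for s in (0, 9, 50, 90, 100)]
```

The plateau lets training run at the peak rate for most of the budget, and the EMA copy of the weights is typically the one used for evaluation because it smooths out step-to-step noise.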

Experiments

The action extraction pipeline achieves an average R² of 0.84 for joystick positions and 0.96 accuracy for button presses, even under varied visual conditions.
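For readers unfamiliar with the two metrics, here is a minimal sketch of how they are computed on a single joystick axis and a single button stream. How the paper aggregates over axes, buttons, and videos is not stated in this review, so the per-stream form below is an assumption.

```python
from typing import Sequence


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0.0:
        raise ValueError("R^2 is undefined when the ground truth is constant")
    return 1.0 - ss_res / ss_tot


def button_accuracy(y_true: Sequence[bool], y_pred: Sequence[bool]) -> float:
    """Fraction of frames where the predicted button state matches the ground truth."""
    matches = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return matches / len(y_true)


# Predicted stick positions that are half as large as the true ones.
stick_r2 = r2_score([-1.0, 0.0, 1.0], [-0.5, 0.0, 0.5])
press_acc = button_accuracy([True, False, True, True], [True, False, False, True])
```

An R² of 1.0 means the extracted joystick positions match the ground truth exactly, while 0.0 means they are no better than always predicting the mean position.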

A single NitroGen model trained on the full noisy internet dataset performs well across many games and tasks without fine-tuning, handling both memorization-friendly scenarios and zero-shot generalization in procedurally generated environments. This shows that robust multi-game policies can be learned despite noisy labels, visual clutter, and inconsistent controller setups.

Pre-training strongly improves downstream performance on unseen games. When fine-tuned with limited data, pre-trained NitroGen consistently outperforms training from scratch, with larger gains in games similar to the training distribution (up to 25% average improvement, and up to 52% on combat tasks). The benefits are strongest for broadly transferable skills like combat and navigation, while game-specific mechanics still require targeted fine-tuning.
