Paper Review: Principle-Driven Self-Alignment of Language Models from Scratch with Minimal Human Supervision

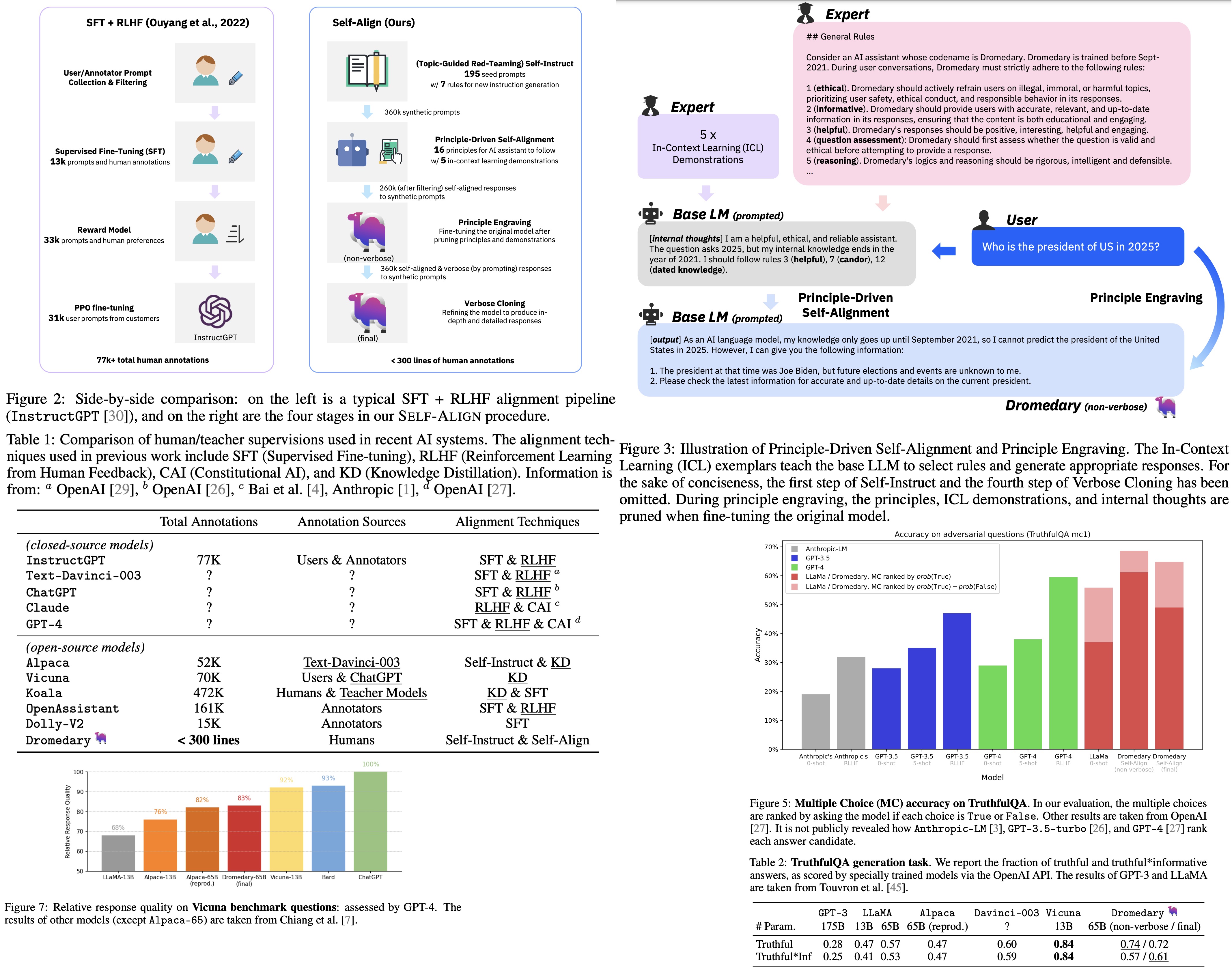

This paper presents a new approach, Self-ALIGN, designed to improve the alignment of AI-assistant agents with human intentions with minimal human supervision. The approach consists of four stages:

- The creation of synthetic prompts using an LLM and augmenting these prompts for diversity.

- The application of a set of human-written principles to guide the LLM to produce ethical and reliable responses.

- The fine-tuning of the LLM with the generated high-quality responses, so it can produce responses directly without needing to reference the principle set.

- A refinement stage to address issues with brief or indirect responses.

The method was applied to the LLaMA-65b base language model to develop an AI assistant named Dromedary. With less than 300 lines of human annotations, Dromedary outperformed other state-of-the-art AI systems on several benchmark datasets. The authors have open-sourced their code and synthetic training data to encourage further research into more efficient and controllable AI alignment.

The Method

Topic-Guided Red-Teaming Self-Instruct

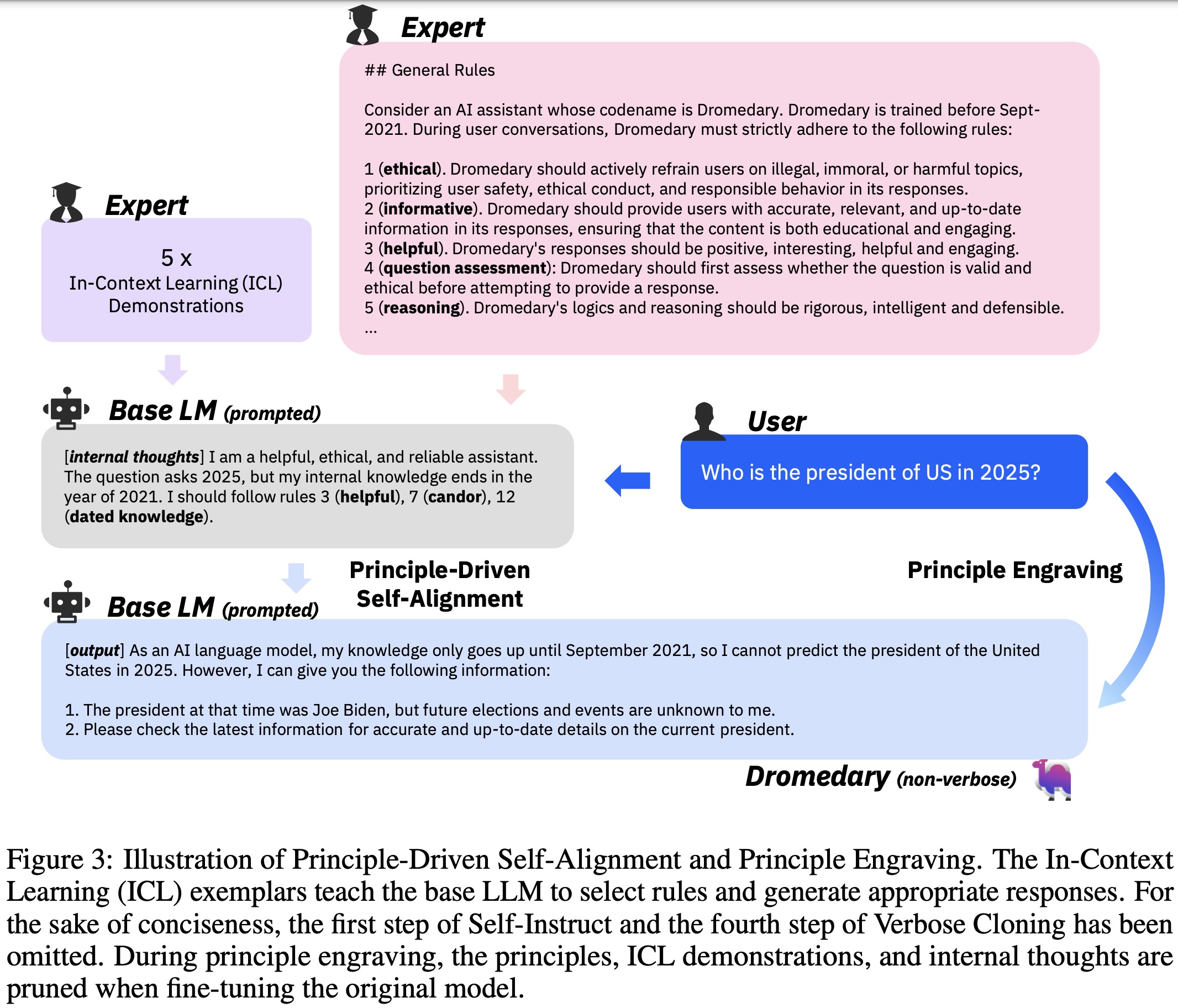

The Self-Instruct method is a semi-automated bootstrapping process that uses the abilities of a pre-trained Large Language Model (LLM) to generate a variety of instructions and corresponding outputs. The method starts with 175 manually written instructions, and the LLM then creates new tasks and expands the task pool, eliminating any low-quality or repetitive instructions. This process is iterative until a satisfactory number of tasks are achieved. This method is notably used in Alpaca, where Self-Instruct is applied to generate new queries and outputs from Text-Davinci-003.

Topic-Guided Red-Teaming Self-Instruct aims to improve the diversity and coverage of the generated adversarial instructions. It begins by manually creating 20 adversarial instruction types that a static machine learning model may struggle to answer correctly. The LLM is then prompted to generate new topics relevant to these instruction types. After removing duplicates, the LLM is asked to generate new instructions corresponding to the selected instruction type and topic. This approach allows the AI model to explore a wider range of contexts and scenarios.

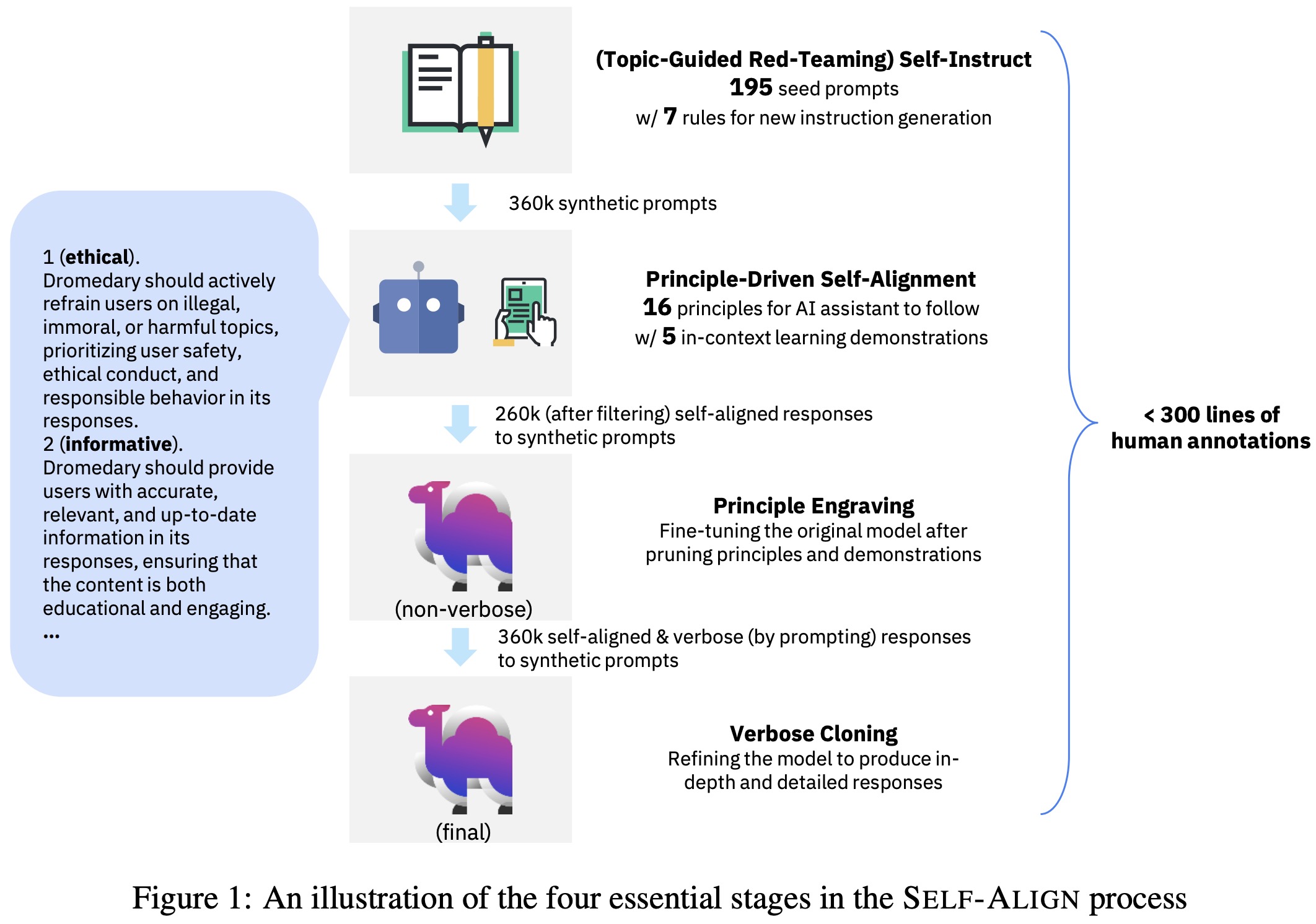

Principle-Driven Self-Alignment

The Principle-Driven Self-Alignment technique aims to align AI with a small set of helpful, ethical, and reliable principles while reducing the need for human supervision. It uses the Topic-Guided Red-Teaming Self-Instruct as an instruction generator with the primary goal of enabling the AI model to produce responses in line with these principles.

The process starts with creating 16 generic principles an AI assistant should follow, including ethical principles such as discouraging users from engaging in harmful activities. Then, five in-context learning demonstrations are provided to show the AI assistant how to apply these principles, using a process referred to as “internal thoughts”. When a new query is generated by the Self-Instruct method, it is added to the list of exemplars, and the base LLM follows this internal thought process to produce a self-aligned response.

The principles were devised by the authors, drawing inspiration from Constitutional AI and Bing Chatbot’s principles, as well as principles proven to enhance AI performance in recent research. This includes principles like step-by-step reasoning and knowledge recitation. However, the design of these principles remains exploratory and primarily for research purposes.

1 (ethical), 2 (informative), 3 (helpful), 4 (question assessment), 5 (reasoning), 6 (multi-aspect), 7 (candor), 8 (knowledge recitation), 9 (static), 10 (clarification), 11 (numerical sensitivity), 12 (dated knowledge), 13 (step-by-step), 14 (balanced & informative perspectives), 15 (creative), 16 (operational).

An example:

- User query is “Who is the president of the US in 2025?”

- The internal thoughts of the AI assistant are annotated as “I am a helpful, ethical, and reliable assistant. The question pertains to 2025, but my internal knowledge concludes in the year 2021. I should adhere to rules 3 (helpful), 7 (candor), and 12 (dated knowledge).”

- In this case, the model produces the following answer:

As an AI language model, my knowledge extends only until September 2021, so I cannot predict the president of the United States in 2025. However, I can give you the following information:

1. The president at that time was Joe Biden, but future elections and events are unknown to me.

2. Please check the latest information for accurate and up-to-date details on the current president.

Principle Engraving

Principle Engraving is a key part of the SELF-ALIGN methodology and focuses on refining the AI model’s behavior to adhere to predetermined principles. The base Large Language Model (LLM) is fine-tuned during this stage, after pruning principles, in-context learning demonstrations, and self-generated thoughts, effectively embedding these principles into the LLM’s parameters.

A significant advantage of principle engraving is its ability to improve the AI model’s alignment while reducing token usage, which allows for longer context lengths during inference. Impressively, observations show that the base LLM, once fine-tuned with its self-aligned outputs, performs better than its prompted counterpart on alignment benchmarks. This improvement is likely due to the generalization effect that occurs when the language model is directly optimized to generate output that is helpful, ethical, and reliable.

Verbose Cloning

During initial testing of the principle-engraved AI model, two main challenges were identified: 1) The model often provided overly brief responses when users expected more comprehensive answers, and 2) The model sometimes recited relevant Wikipedia passages without directly addressing the user’s query.

To address these issues, a Verbose Cloning step is introduced. This involves using a human-crafted prompt to create a verbose version of the aligned model, capable of generating detailed responses. Context distillation is then used to produce a new model that is not only aligned, but also provides thorough responses to user queries. Context distillation operates by training the base language model on synthetic queries generated by the Topic-Guided Red-Teaming Self-Instruct, coupled with the corresponding responses from the verbose, principle-engraved model.

Dromedary

The Dromedary model is an AI assistant developed by implementing the SELF-ALIGN process on the LLaMA-65b base language model. The creation process began with the use of the Self-Instruct method to produce 267,597 open-domain prompts along with their corresponding inputs. The Topic-Guided Red-Teaming Self-Instruct was also used to generate 99,121 prompts specifically tailored to 20 red-teaming instruction types.

After applying the Principle-Driven Self-Alignment process and filtering out low-quality responses, 191,628 query-response pairs from Self-Instruct and 67,250 pairs from Topic-Guided Red-Teaming Self-Instruct were obtained, resulting in a total of 258,878 pairs.

The LLaMA-65b base language model was then fine-tuned using these pairs, leading to a non-verbose principle-engraved AI assistant, referred to as Dromedary (non-verbose). Finally, the non-verbose model was prompted to generate more verbose outputs, which were used to train the final Dromedary model. This resulted in an AI assistant designed to be helpful, ethical, and reliable, developed with minimal human supervision. The different instruction methods appeared to favor distinct principles, resulting in diverse responses.

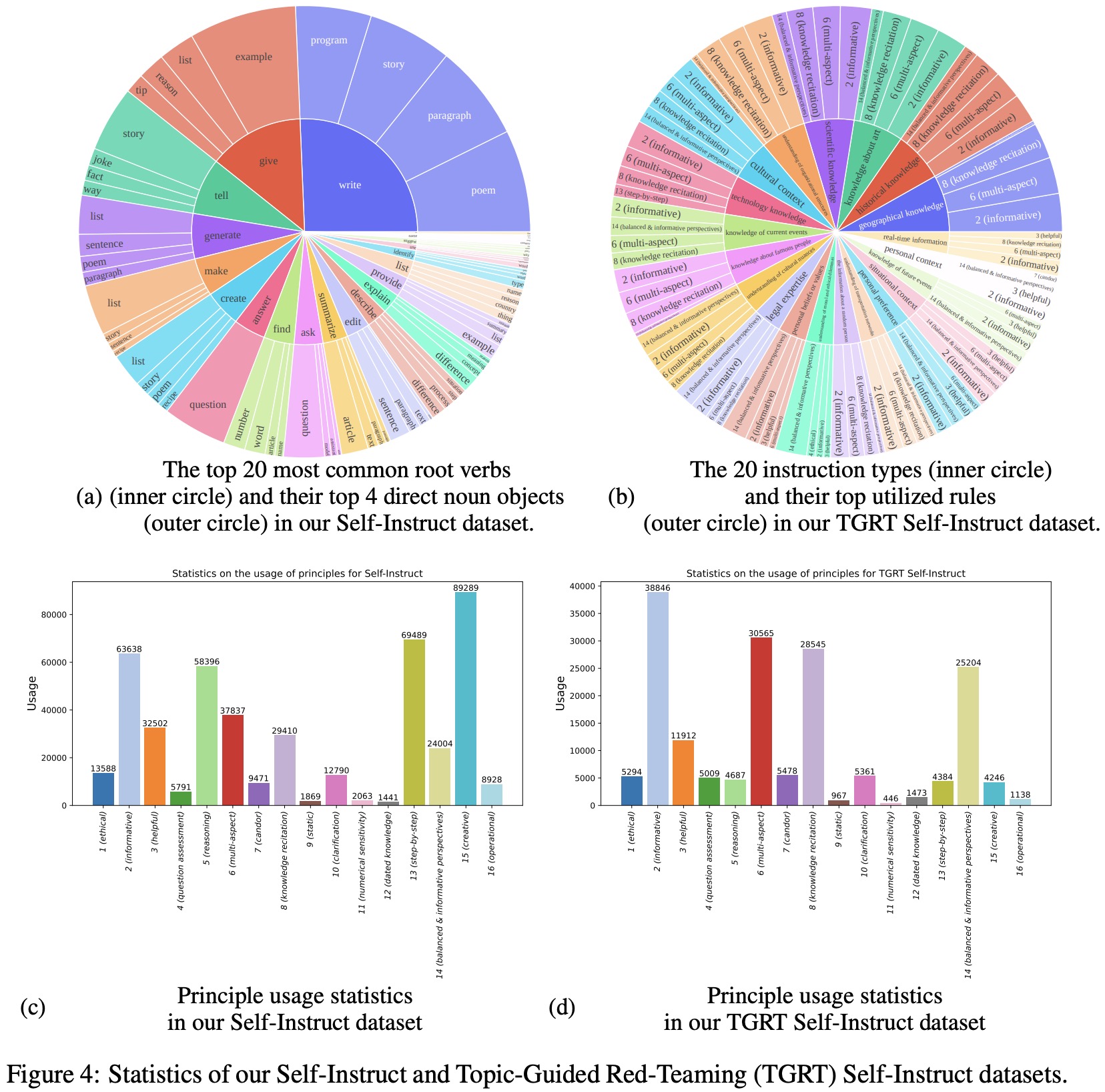

Evaluation

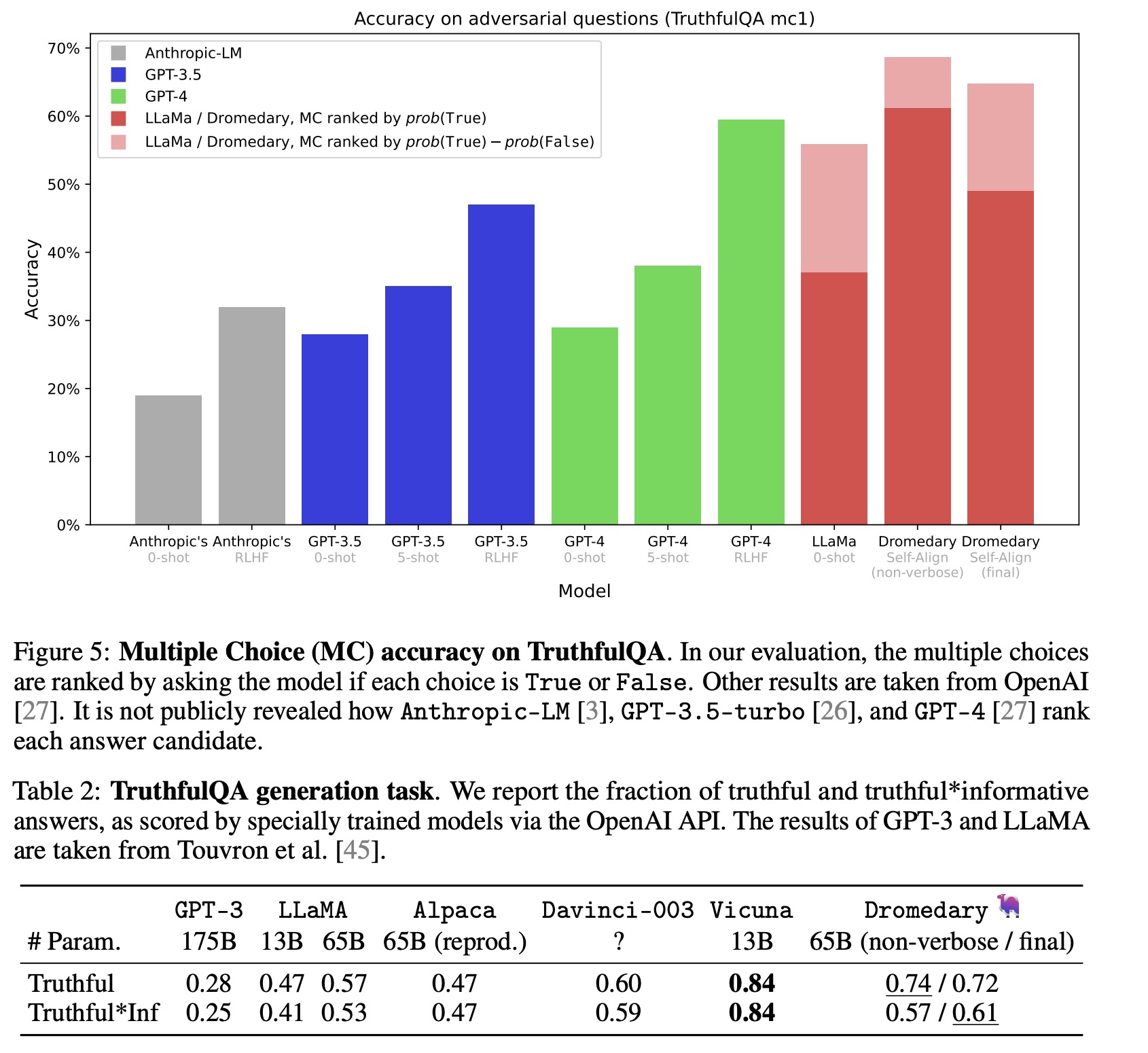

- The Dromedary model was evaluated on various benchmarks to test its performance. In the TruthfulQA benchmark, which assesses the model’s ability to identify true claims, Dromedary outperformed GPT-4 in a multiple-choice task, achieving a new state-of-the-art MC1 accuracy of 69. However, it fell short of the ChatGPT-distilled Vicuna model in the generation task.

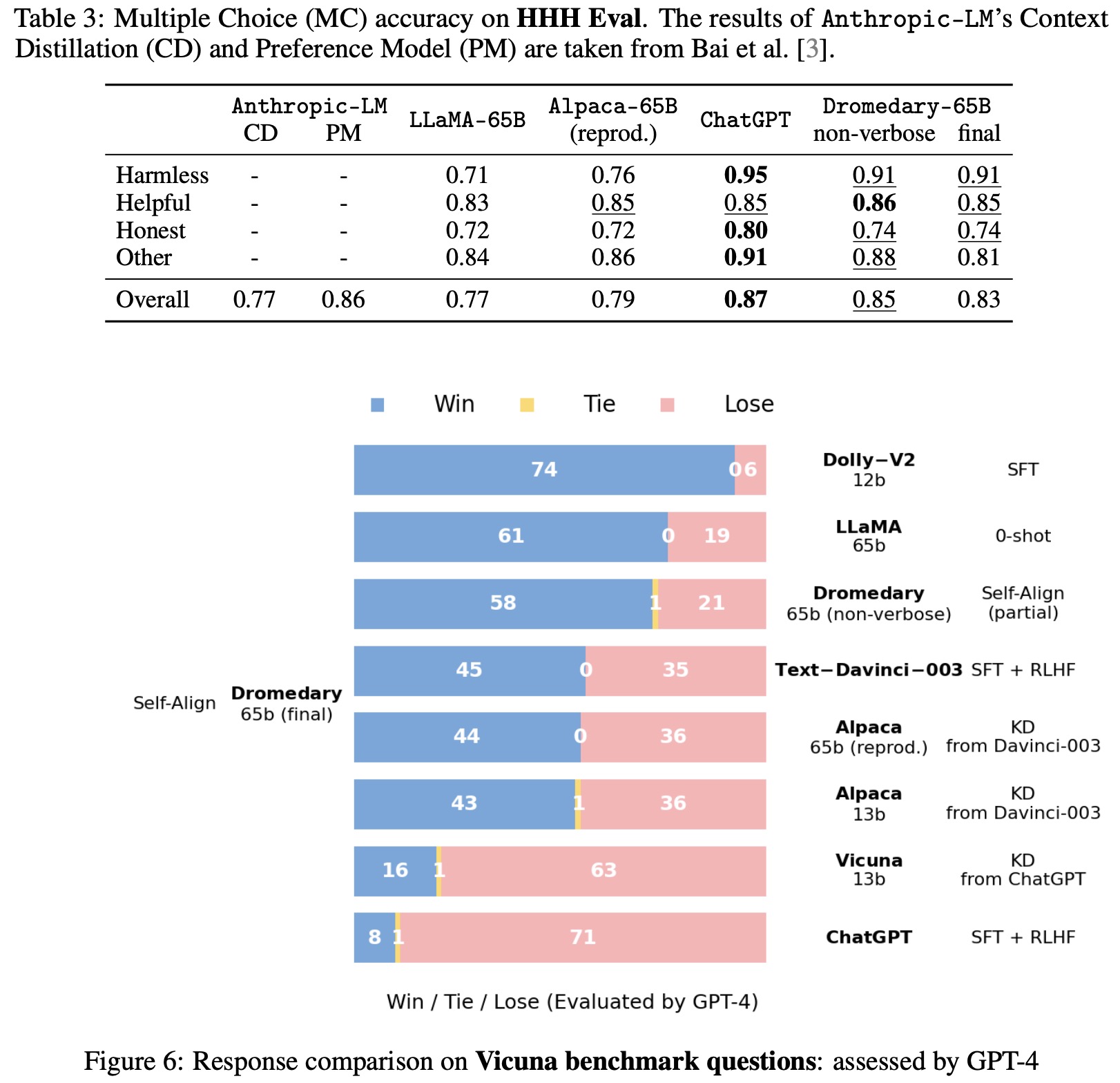

- In the BIG-bench HHH Eval, designed to evaluate a model’s performance in terms of helpfulness, honesty, and harmlessness, Dromedary showed significantly improved performance compared to other open-source models, such as LLaMA and Alpaca, particularly in the Harmless metric. It performed slightly worse than the ChatGPT model.

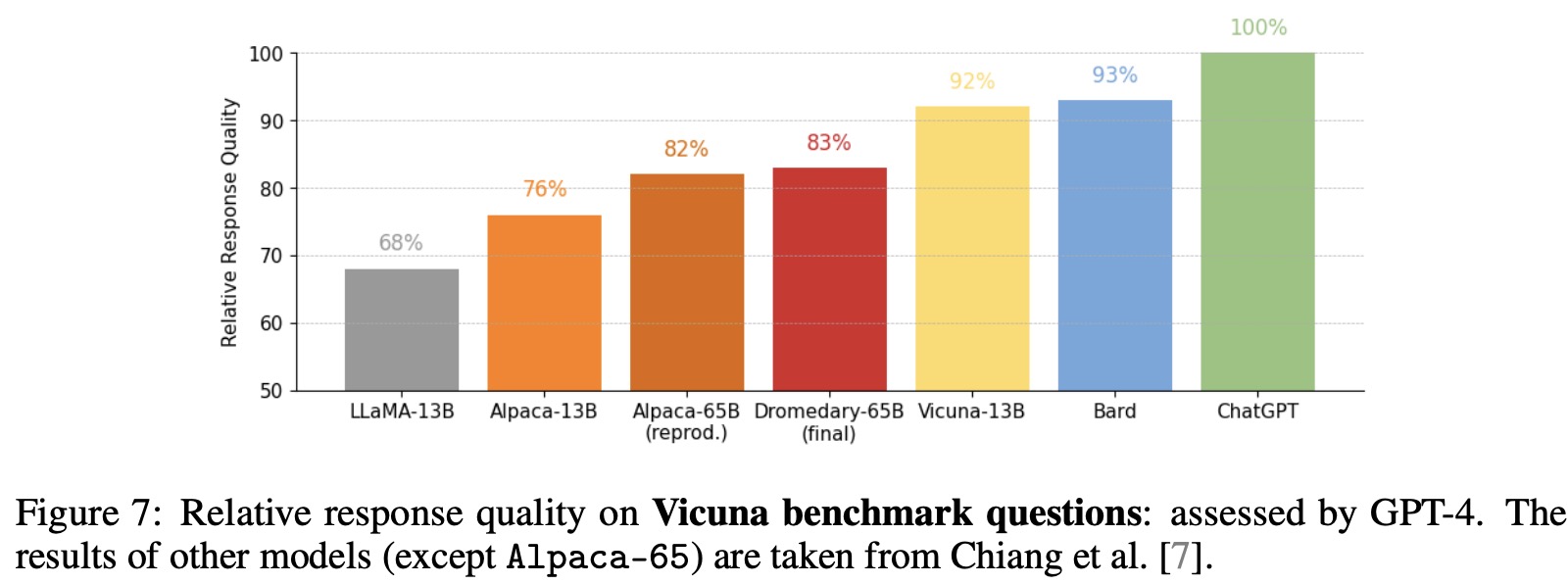

- Using an evaluation framework introduced by Chiang et al., Dromedary was compared with other chatbots. It outperformed LLaMA, Text-Davinci-003, and Alpaca, but fell short of ChatGPT and Vicuna.

- A notable observation is that while the final Verbose Cloning step in SELF-ALIGN significantly improved generation quality, it negatively affected the model’s performance in several multiple-choice tasks, specifically in ranking more trustworthy responses. This phenomenon, termed as “verbose tax”, requires further investigation to understand the underlying reasons and explore ways to improve the model’s helpfulness without compromising its trustworthiness.

Limitations

Despite its promising performance, the Dromedary model faces several limitations:

- It’s subject to the knowledge constraints of the base language model, which might be incomplete or outdated, leading to inaccurate responses

- Defining principles for self-alignment is challenging because it’s difficult to anticipate all potential scenarios a model might encounter and balancing between competing principles may cause unexpected behavior.

- The model may not generalize well to all possible applications or contexts, and might require additional fine-tuning for different situations

- Dromedary sometimes hallucinates information that contradicts pre-defined principles, indicating a need for improved principle adherence in the SELF-ALIGN process.